ANTLR 3

by

R. Mark Volkmann, Partner/Software Engineer

Object Computing, Inc. (OCI)

Translator: Morya

Preface

前言

ANTLR is a big topic, so this is a big article. The table of contents that follows contains hyperlinks to allow easy navigation to the many topics discussed. Topics are introduced in the order in which understanding them is essential to the example code that follows. Your questions and feedback are welcomed at mark@ociweb.com.

ANTLR 是一個很大的話題,所以,這篇也有點長。 下面這個列表里面包含了一些鏈接,它們指向本頁其它位置,以方便瀏覽。 各個專題以一個在看過示例后能迅速理解的方式排列介紹。 有任何問題或者反饋,都歡迎致信 mark@ociweb.com 。

Table of Contents

內容列表

- Part I - Overview

- Part II - Jumping In

- Part III - Lexers

- Part IV - Parsers

- Part V - Tree Parsers

- Part VI - ANTLRWorks

- Part VII - Putting It All Together

- Part VIII - Wrap Up

Part I - Overview

ANTLR is a free, open source parser generator tool that is used to implement "real" programming languages and domain-specific languages (DSLs). The name stands for ANother Tool for Language Recognition. Terence Parr, a professor at the University of San Francisco, implemented (in Java) and maintains it. It can be downloaded from http://www.antlr.org. This site also contains documentation, articles, examples, a Wiki and information about mailing lists.

ANTLR 是一個免費,開源的解析器生成工具,它被用來實現“真正的”編程語言,和特殊語法語言(DSLs)。 ANTLR是 ANother Tool for Language Recognition 的縮寫。 圣弗朗西斯科大學教授Terence Parr,(用Java) 實現并維護著這個工具。 下載地址:http://www.antlr.org。 這個站點有相關的文檔、文章、示例,郵件列表,還有一個維基。

Many people feel that ANTLR is easier to use than other, similar tools. One reason for this is the syntax it uses to express grammars. Another is the existence of a graphical grammar editor and debugger called ANTLRWorks. Jean Bovet, a former master's student at the University of San Francisco who worked with Terence, implemented (using Java Swing) and maintains it.

很多人都認為 ANTLR 比同類工具更具可用性。 其中一個原因在于它描述 grammar 的語法。 另一個是圖形化的,可調試的 ANTLRWorks 文法編輯器的存在。 它由 Jean Bovet 使用 Java(Swing) 實現并維護。 他是在圣弗朗西斯科大學和Terence共事的一位former masters ?學生。

A brief word about conventions in this article... ANTLR grammar syntax makes frequent use of the characters [ ] and { }. When describing a placeholder we will use italics rather than surrounding it with { }. When describing something that's optional, we'll follow it with a question mark rather than surrounding it with [ ].

本文使用的標記符轉換簡略介紹... ANTLR 文法文件的語法,對 [ ] 和 { } 使用的比較頻繁。 當描述一個占位符的時候,我們使用斜體字而不是把它用 { } 括起來。 描述可選部分的時候,我們使用 ? 后綴而不是用 [ ] 括起來。

ANTLR uses Extended Backus-Naur (EBNF) grammars which can directly express optional and repeated elements. BNF grammars require a more verbose syntax to express these. EBNF grammars also support "subrules" which are parenthesized groups of elements.

ANTLR 使用 Extended Backus-Naur 擴展巴克斯標記式 (EBNF) 文法,它可以直接表述 “可選”, “重復”元素。而 BNF 文法則需要更繁瑣的語法來表達。 EBNF 文法也支持括號包含的元素組的子規則。

ANTLR supports infinite lookahead for selecting the rule alternative that matches the portion of the input stream being evaluated. The technical way of stating this is that ANTLR supports LL(*). An LL(k) parser is a top-down parser that parses from left to right, constructs a leftmost derivation of the input and looks ahead k tokens when selecting between rule alternatives. The * means any number of lookahead tokens. Another type of parser, LR(k), is a bottom-up parser that parses from left to right and constructs a rightmost derivation of the input in reverse. LL parsers can't handle left-recursive rules, so those must be avoided when writing ANTLR grammars. Most people find LL grammars easier to understand than LR grammars. See Wikipedia for more detailed descriptions of LL and LR parsers.

當多個規則符合輸入的一部分內容時,ANTLR 支持無窮前看,以消除歧義。 用術語說就是 ANTLR 支持 LL(*)。 LL(k) parser 是一個自頂向下 parser ,它從左到右解析, 構建一個輸入的最左推導,當遇到多個規則選擇時,前看n個詞素來決定。 * 代表前看任一個詞素。 另一種類型的parser,LR(k),自底向上 parser,從左到右解析,并且構建 一個輸入的最右推導。 LL parsers 不能處理左遞歸規則,在寫Antlr文法的時一定要避免。 多數人覺得 LL 文法比 LR 文法更容易理解。詳細參考:維基百科 LL 和 LR 解析器。
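To make the left-recursion restriction concrete, here is a minimal hand-written sketch of a top-down parser for subtraction chains. It is not ANTLR-generated code; the class name LeftRecursionDemo and its methods are invented for illustration, and tokens are assumed to be separated by whitespace. A left-recursive rule such as expr : expr '-' NUMBER | NUMBER would translate into a method that calls itself before consuming any input, so the rule is rewritten in EBNF form as expr : NUMBER ('-' NUMBER)*, which becomes a loop.

```java
import java.util.ArrayList;
import java.util.List;

/** Hand-written LL(1) sketch for the rule: expr : NUMBER ('-' NUMBER)* ; */
final class LeftRecursionDemo {
    private final List<String> tokens;
    private int pos = 0;

    private LeftRecursionDemo(List<String> tokens) {
        this.tokens = tokens;
    }

    /** Splits whitespace-separated input such as "10 - 3 - 2" into tokens. */
    static List<String> tokenize(String input) {
        List<String> result = new ArrayList<>();
        for (String piece : input.trim().split("\\s+")) {
            result.add(piece);
        }
        return result;
    }

    /** expr : NUMBER ('-' NUMBER)* ; */
    private double expr() {
        double value = number();
        // One token of lookahead decides whether the loop continues.
        while (pos < tokens.size() && tokens.get(pos).equals("-")) {
            pos++;              // consume '-'
            value -= number();  // groups to the left: (10 - 3) - 2
        }
        if (pos != tokens.size()) {
            throw new IllegalArgumentException("Unexpected token: " + tokens.get(pos));
        }
        return value;
    }

    /** NUMBER: consumes one token and converts it to a double. */
    private double number() {
        if (pos >= tokens.size()) {
            throw new IllegalArgumentException("Expected a number at end of input");
        }
        return Double.parseDouble(tokens.get(pos++));
    }

    static double evaluate(String input) {
        return new LeftRecursionDemo(tokenize(input)).expr();
    }

    public static void main(String[] args) {
        System.out.println(evaluate("10 - 3 - 2")); // prints 5.0
    }
}
```

The loop keeps the left-to-right grouping that the left-recursive form was meant to express, which is why this rewrite is the usual way to adapt such rules for an LL parser.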

ANTLR supports three kinds of predicates that aid in resolving ambiguities. These allow rules that are not based strictly on input syntax.

ANTLR 支持三種斷言來解決歧義。 它們允許不是嚴格基于輸入語法的規則。

While ANTLR is implemented in Java, it generates code in many target languages including Java, Ruby, Python, C, C++, C# and Objective-C.

雖然 ANTLR 使用 Java 編寫,它支持多種目標語言,包括 Java, Ruby, Python, C, C++, C# 和 Objective C。

There are IDE plug-ins available for working with ANTLR inside IDEA and Eclipse, but not yet for NetBeans or other IDEs.

IDEA 和 Eclipse 有相關的插件來支持 ANTLR,NetBeans 等 IDE 暫時還沒有。

There are three primary use cases for ANTLR.

ANTLR 有三種主要的使用方法。

The first is implementing "validators." These generate code that validates that input obeys grammar rules.

第一種是實現“驗證器”。 它檢驗輸入文本是否符合文法規定的規則。

The second is implementing "processors." These generate code that validates and processes input. They can perform calculations, update databases, read configuration files into runtime data structures, etc. Our Math example coming up is an example of a processor.

第二種是實現 “處理器”。 它檢驗并處理輸入文本。 可以進行計算,更新數據庫,讀取配置文件到內存中,等。 我們后面的Math示例就是一個處理器的例子。

The third is implementing "translators." These generate code that validates and translates input into another format such as a programming language or bytecode.

第三種就是“翻譯器”。 它驗證輸入,并將輸入翻譯成另一種格式,比如編程語言或字節碼。

Later we'll discuss "actions" and "rewrite rules." It's useful to point out where these are used in the three use cases above. Grammars for validators don't use actions or rewrite rules. Grammars for processors use actions, but not rewrite rules. Grammars for translators use actions (containing printlns) and/or rewrite rules.

晚點我們會討論 “動作” 和 “重寫規則”。 當然,明確這三種在何種情況使用會利于理解。 驗證器不使用 “動作”和 “重寫規則”。 處理器使用“動作”,但不使用“重寫規則”。 翻譯器使用“動作”,可能使用“重寫規則”。 (包含 printlns)

Dynamic languages like Ruby and Groovy can be used to implement many DSLs. However, when they are used, the DSLs have to live within the syntax rules of the language. For example, such DSLs often require dots between object references and method names, parameters separated by commas, and blocks of code surrounded by curly braces or do/end keywords. Using a tool like ANTLR to implement a DSL provides maximum control over the syntax of the DSL.

- Lexer

- converts a stream of characters to a stream of tokens (ANTLR token objects know their start/stop character stream index, line number, index within the line, and more)

- 把字符流轉換成詞素流, (ANTLR 詞素對性知道它們自己的 start/stop 索引,行號,行中位置,等)

- Parser

- processes a stream of tokens, possibly creating an AST

- 處理詞素流輸入,并生成AST(可選)

- Abstract Syntax Tree (AST)

- an intermediate tree representation of the parsed input that is simpler to process than the stream of tokens and can be efficiently processed multiple times

- 輸入流的樹表示格式,它比詞素流更方便處理,且可以高效的多次處理。

- Tree Parser

- processes an AST

- StringTemplate

- a library that supports using templates with placeholders for outputting text (ex. Java source code)

- 一個支持占位符模板的庫,用來輸出文本(比如 Java 源文件)

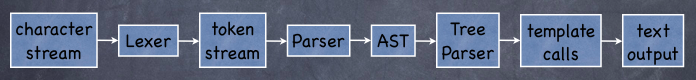

An input character stream is fed into the lexer. The lexer converts this to a stream of tokens that is fed to the parser. The parser often constructs an AST which is fed to the tree parser. The tree parser processes the AST and optionally produces text output, possibly using StringTemplate.

字符流送到 lexer,lexer 將它們轉換到詞素流,然后送到 parser。 paser 常常建立一個 AST ,并送到tree parser。 tree paser 處理 AST ,可能還會使用字符串模板生成文本輸出。

The general steps involved in using ANTLR include the following.

使用 ANTLR 大致有以下幾步。

- Write the grammar using one or more files.

A common approach is to use three grammar files, each focusing on a specific aspect of the processing. The first is the lexer grammar, which creates tokens from text input. The second is the parser grammar, which creates an AST from tokens. The third is the tree parser grammar, which processes an AST. This results in three relatively simple grammar files as opposed to one complex grammar file.

- 把文法定義寫入一個或多個文件中

一個通常的做法是使用三個文法文件,每個單獨處理一個方面。 第一個是掃描器定義,從字符輸入建立詞素流。 第二個是解析器定義,從詞素流建立AST。 第三個是樹解析器定義,處理AST輸入。 這樣會產生三個相關的,但簡化的文法文件,而不是一個單獨的復雜大文件。

- Optionally write StringTemplate templates for producing output.

- 【可選】為輸出編寫字符串模板

- Debug the grammar using ANTLRWorks.

- 使用 ANTLRWorks 調試文法

- Generate classes from the grammar. These validate that text input conforms to the grammar and execute target language "actions" specified in the grammar.

- 從文法定義生成相關的處理類 這些類會驗證輸入文本是否符合文法定義并執行目標語言文法中指定的“動作”。

- Write an application that uses the generated classes.

- 使用生成的類完成完整程序。

- Feed the application text that conforms to the grammar.

- 給程序輸入符合文法的文件。

Part II - Jumping In

Enough background information. Let's create a language!

現在已經有足夠的背景信息,我們來創建一個語言吧!

Here's a list of features we want our language to have:

下面是對我們的語言的功能期待:

- run on a file or interactively

- 可以執行一個文件或者交互

- get help using "?" or "help"

- 可以用

? 或 help 取得幫助

- support a single data type: double

- 支持double數據類型

- assign values to variables using syntax like

a = 3.14

- 可以使用類似

a = 3.14 的語法對一個變量進行賦值

- define polynomial functions using syntax like

f(x) = 3x^2 - 4x + 2

- 使用如下語法定義一個多項式函數

f(x) = 3x^2 - 4x + 2

- print strings, numbers, variables and function evaluations using syntax like

print "The value of f for " a " is " f(a)

- 使用如下語法打印字符串,數值,變量,函數的求值

print "The value of f for " a " is " f(a)

- print the definition of a function and its derivative using syntax like

print "The derivative of " f() " is " f'()

- 使用如下語法打印一個函數的定義和導函數

print "The derivative of " f() " is " f'()

- list variables and functions using "list variables" and "list functions"

- 使用如下語法列表變量和函數

list variables 和 list functions

- add and subtract functions using syntax like

h = f - g

(note that the variables used in the functions do not have to match)

- 使用如下的語法進行函數的加減

h = f - g

(注意,函數中使用的變量不需要是一個變量實例)

- exit using "exit" or "quit"

- 使用

exit 或 quit 退出程序

Here's some example input.

下面是輸入示例

a = 3.14

f(x) = 3x^2 - 4x + 2

print "The value of f for " a " is " f(a)

print "The derivative of " f() " is " f'()

list variables

list functions

g(y) = 2y^3 + 6y - 5

h = f + g

print h()

Here's the output that would be produced.

下面是理論輸出

The value of f for 3.14 is 19.0188

The derivative of f(x) = 3x^2 - 4x + 2 is f'(x) = 6x - 4

# of variables defined: 1

a = 3.14

# of functions defined: 1

f(x) = 3x^2 - 4x + 2

h(x) = 2x^3 + 3x^2 + 2x - 3
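The numbers in this expected output can be checked independently of any grammar. The sketch below is an assumption-laden illustration, not the article's Function class: it stores a polynomial as an array of coefficients indexed by exponent, and the names PolynomialSketch, evaluate, derivative and add are invented here.

```java
import java.util.Arrays;

/** Polynomial as coefficients indexed by exponent: c[0] + c[1]*x + c[2]*x^2 + ... */
final class PolynomialSketch {
    /** Evaluates the polynomial at x using Horner's rule. */
    static double evaluate(double[] c, double x) {
        double result = 0.0;
        for (int i = c.length - 1; i >= 0; i--) {
            result = result * x + c[i];
        }
        return result;
    }

    /** The derivative of c[i]*x^i is i*c[i]*x^(i-1). */
    static double[] derivative(double[] c) {
        if (c.length <= 1) {
            return new double[] {0.0};
        }
        double[] d = new double[c.length - 1];
        for (int i = 1; i < c.length; i++) {
            d[i - 1] = i * c[i];
        }
        return d;
    }

    /** Adds two polynomials coefficient by coefficient. */
    static double[] add(double[] a, double[] b) {
        double[] sum = new double[Math.max(a.length, b.length)];
        for (int i = 0; i < a.length; i++) sum[i] += a[i];
        for (int i = 0; i < b.length; i++) sum[i] += b[i];
        return sum;
    }

    public static void main(String[] args) {
        double[] f = {2, -4, 3};     // f(x) = 3x^2 - 4x + 2
        double[] g = {-5, 6, 0, 2};  // g(y) = 2y^3 + 6y - 5
        System.out.println(evaluate(f, 3.14));              // approximately 19.0188
        System.out.println(Arrays.toString(derivative(f))); // [-4.0, 6.0], i.e. 6x - 4
        System.out.println(Arrays.toString(add(f, g)));     // [-3.0, 2.0, 3.0, 2.0]
    }
}
```

Read from the highest exponent down, the last array is 2x^3 + 3x^2 + 2x - 3, which agrees with the h(x) line of the output.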

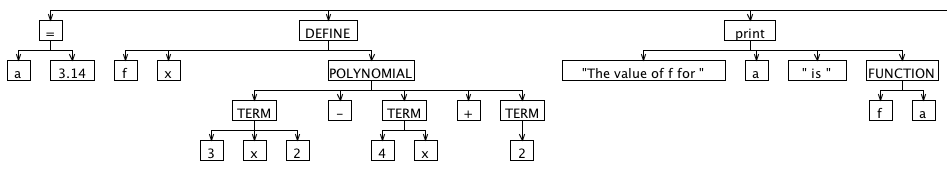

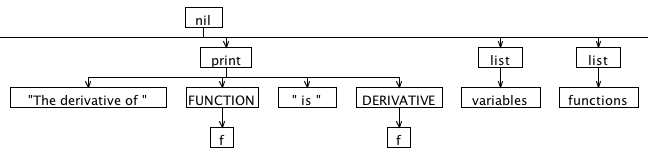

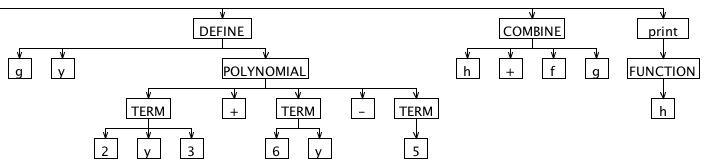

Here's the AST we'd like to produce for the input above, drawn by ANTLRWorks. It's split into three parts because the image is really wide. The "nil" root node is automatically supplied by ANTLR. Note the horizontal line under the "nil" root node that connects the three graphics. Nodes with uppercase names are "imaginary nodes" added for the purpose of grouping other nodes. We'll discuss those in more detail later.

這里是我們將會從輸入生成的AST,使用 ANTLRWorks 繪出。 它被分成三塊,因為圖片太寬了。 根節點 "nil" 由 ANTLR 自動提供。 注意,根節點 "nil" 下面的豎線是連著三張圖片的………… 名字大寫的節點是 “虛擬節點”。 為把其它節點分類而加。 我們晚點會詳細討論。

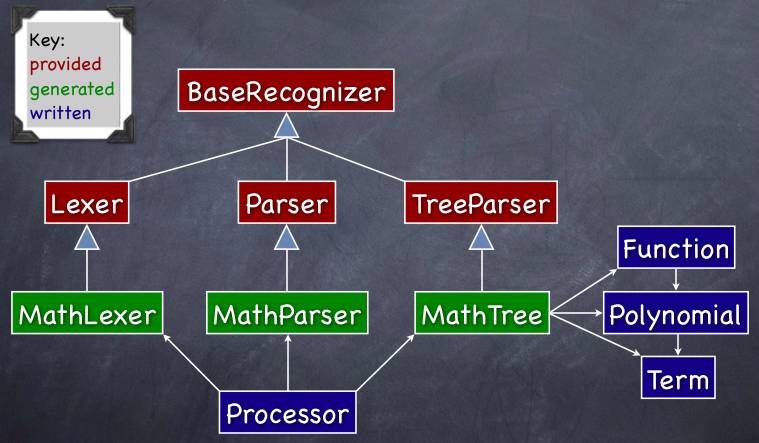

The diagram below shows the relationships between the most important classes used in this example.

下面的圖片展示了本示例中用到的幾個最重要的類之間的關系。

Note the key in the upper-left corner of the diagram that distinguishes between classes provided by ANTLR, classes generated from our grammar by ANTLR, and classes we wrote manually.

注意,在圖片左上角的 key,指出了 ANTLR 提供的類,文法定義自動生成的類,和我們自己寫的類。

The syntax of an ANTLR grammar is described below.

ANTLR grammar 文件的語法在下面描述

grammar-type? grammar grammar-name;

grammar-options?

token-spec?

attribute-scopes?

grammar-actions?

rule+

Comments in an ANTLR grammar use the same syntax as Java. There are three types of grammars: lexer, parser and tree. If a grammar-type isn't specified, it defaults to a combined lexer and parser. The name of the file containing the grammar must match the grammar-name and have a ".g" extension. The classes generated by ANTLR will contain a method for each rule in the grammar. Each of the elements of grammar syntax above will be discussed in the order they are needed to implement our Math language.

ANTLR grammar 使用和Java相同的注釋語法。( /* bb */ //aa ) grammar有三種: lexer, parser 和 tree。 如果為指定 grammar-type ,默認為 lexer 和 parser 混合 grammar 。 包含 grammar 的文件,其文件名必須和 grammar-name 完全一致(注意大小寫), 而且,擴展名為 ".g" 。 ANTLR 生成的類會為文法里的每一個規則生成一個對應的函數。 上面討論的語法元素,在需要實現Math語言而用到的時候,會引入詳細說明。(唉,組織不好)

Grammar options include the following:

grammar option包含下面幾個:

- AST node type -

ASTLabelType = CommonTree

- This is used in grammars that create or parse ASTs. CommonTree is a provided class. It is also possible to use your own class to represent AST nodes.

- 在生成或解析 AST 的 grammars 中使用。 CommonTree 是 ANTLR 內置的一個類。 也可以使用自定義的類來表述 AST 節點。

- infinite lookahead -

backtrack = true

- 無限前看 -

backtrack = true

- This provides infinite lookahead for all rules. Parsing is slower with this on.

- 對所有的規則提供無限前看。 開啟后解析速度會變慢。

- limited lookahead -

k = integer

- 有限前看 -

k = integer

- This limits lookahead to a given number of tokens.

- 設定前看 k 個詞素

- output type -

output = AST | template

- 輸出類型 -

output = AST | template

- Choose template when using the StringTemplate library. Don't set this when not producing output or when producing it with printlns in actions.

- 使用StringTemplate的話,就選擇

template

如果不進行輸出,或者使用 println 進行輸出就不要設置此選項。

- token vocabulary -

tokenVocab = grammar-name

- This allows one grammar file to use tokens defined in another (with lexer rules or a token spec). It reads generated .tokens files.

- 允許讀取另一個 grammar 文件定義的詞素。 (with lexer rules or a token spec.). 它讀取生成的

.token 文件。

Grammar options are specified using the following syntax. Note that quotes aren't needed around single word values.

Grammar option 使用如下語法來指定。 注意,引用(我也不曉得)不需要用單引號包含。

options {

name = 'value';

...

}

Grammar actions add code to the generated code. There are three places where code can be added by a grammar action.

Grammar aciton 會在生成的代碼里添加一些內容。 用 grammar action 可以添加代碼到三個地方。

- Before the generated class definition:

This is commonly used to specify a Java package name and import classes in other packages. The syntax for adding code here is @header { ... }. In a combined lexer/parser grammar, this only affects the generated parser class. To affect the generated lexer class, use @lexer::header { ... }.

- 在生成類的定義位置前:

通常用于指定 Java package 或者 import 其它 package 里的 class。 使用語法 @header { ... }。 在混合的 lexer/parser grammar 內,這樣只會影響生成的parser類。 要對 lexer 類也起作用,需要使用 @lexer::header { ... }。

- Inside the generated class definition:

This is commonly used to define constants, attributes and methods accessible to all rule methods in the generated classes. It can also be used to override methods in the superclasses of the generated classes.

The syntax for adding code here is @members { ... }. In a combined lexer/parser grammar, this only affects the generated parser class. To affect the generated lexer class, use @lexer::members { ... }.

- 在生成類的定義內:

通常用于定義常數,屬性或者一些可以訪問所有 rule 生成函數的方法。 也可以用來重載生成類超類的函數。

使用語法 @members { ... }。 在混合的 lexer/parser grammar 內,這樣只會影響生成的parser類。 要對 lexer 類也起作用,需要使用 @lexer::members { ... }。

- Inside generated methods:

The catch blocks for the try block in the methods generated for each rule can be customized. One use for this is to stop processing after the first error is encountered rather than attempting to recover by skipping unrecognized tokens.

The syntax for adding catch blocks is @rulecatch { catch-blocks }

- 在生成的函數內:

每個規則函數的異常處理塊都可以自定義。 其中一個用處就是,當遇到第一個錯誤的時候,就停止,而不是掠過無法識別的詞素嘗試恢復。

使用語法 @rulecatch { catch-blocks }

Part III - Lexers

A lexer rule or token specification is needed for every kind of token to be processed by the parser grammar. The names of lexer rules must start with an uppercase letter and are typically all uppercase. A lexer rule can be associated with:

一個 parser 需要處理的 token 必須要有相應的規則或 token 規格。 laxer 規則名必須以大寫字母開頭,最好是按慣例全部大寫。 一個 lexer 規則可有以下幾種

- a single literal string expected in the input

- 期待一個單獨的文本字符串

- a selection of literal strings that may be found

- 可選的文本字符串

- a sequence of specific characters and ranges of characters using the cardinality indicators ?, * and +

- 一串特定順序的字符范圍和數量指定符 ?, *,+

A lexer rule cannot be associated with a regular expression.

lexer rule 不能用 正則表達式 指定。

When the lexer chooses the next lexer rule to apply, it chooses the one that matches the most characters. If there is a tie then the one listed first is used, so order matters.

當 lexer 發現輸入流符合同時符合兩個 rule,它會選擇匹配更長的。 如果有兩個都匹配輸入,先出現在 grammar 中的會被匹配。所以,順序前后是有差別的。
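The selection policy just described (longest match wins, ties go to the rule listed first) can be sketched in a few lines of Java. This is only a model of the described behavior, not how ANTLR's generated lexer is implemented. The rule set is a hypothetical subset, and java.util.regex patterns stand in for lexer rules purely for brevity.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Models "longest match wins; on a tie the first rule listed wins". */
final class LongestMatchSketch {
    // LinkedHashMap preserves insertion order, which plays the role of rule order.
    private static final Map<String, Pattern> RULES = new LinkedHashMap<>();
    static {
        RULES.put("LIST", Pattern.compile("list"));
        RULES.put("NAME", Pattern.compile("[a-zA-Z][a-zA-Z0-9_]*"));
        RULES.put("NUMBER", Pattern.compile("[0-9]+"));
    }

    /** Returns the name of the rule chosen at offset pos, or null if none matches. */
    static String chooseRule(String input, int pos) {
        String best = null;
        int bestLength = 0;
        for (Map.Entry<String, Pattern> rule : RULES.entrySet()) {
            Matcher m = rule.getValue().matcher(input).region(pos, input.length());
            // lookingAt() requires the match to begin at the region start.
            if (m.lookingAt()) {
                int length = m.end() - m.start();
                // Strictly greater, so an earlier rule survives a tie.
                if (length > bestLength) {
                    best = rule.getKey();
                    bestLength = length;
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println(chooseRule("list", 0));  // LIST (tie with NAME, listed first)
        System.out.println(chooseRule("lists", 0)); // NAME (five characters beat four)
    }
}
```

Swapping the order of LIST and NAME in the map would turn the keyword "list" into an ordinary NAME, which is the practical meaning of "order matters".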

A lexer rule can refer to other lexer rules. Often they reference "fragment" lexer rules. These do not result in creation of tokens and are only present to simplify the definition of other lexer rules. In the example ahead, LETTER and DIGIT are fragment lexer rules.

lexer rule 可以引用其它 lexer rule。 經常它們引用 "fragment" lexer rule。 那些 rule 不產生實際的 token 標記,它們只是為了簡化定義 lexer rule 而存在。 在前面的 example,LETTER 和 DIGIT 是 fragment lexer rule。

Whitespace and comments in the input are handled in lexer rules. There are two common options for handling these: either throw them away or write them to a different "channel" that is not automatically inspected by the parser. To throw them away, use "skip();". To write them to the special "hidden" channel, use "$channel = HIDDEN;".

輸入中的空白字符和注釋在 lexer rule 里處理。 有兩個常用的 option 來處理這些: 干脆丟棄,或者輸入到另一個 parser 不會自動關注的 "channel"。 丟棄的話,使用 "skip();" 寫入 "hidden" 通道: "$channel = HIDDEN;" 。
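The difference between the two options can be pictured with a small model. In the sketch below, which is not ANTLR code, every token carries a channel number; skipped whitespace never becomes a token, while hidden whitespace is kept but filtered out of what the parser sees. The class ChannelSketch, its Token type and the channel constants are assumptions made for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Models skip() versus writing tokens to a hidden channel. */
final class ChannelSketch {
    static final int DEFAULT_CHANNEL = 0;
    static final int HIDDEN_CHANNEL = 99; // arbitrary value chosen for this sketch

    static final class Token {
        final String text;
        final int channel;

        Token(String text, int channel) {
            this.text = text;
            this.channel = channel;
        }
    }

    /** Splits input into runs of whitespace and runs of non-whitespace. */
    static List<Token> tokenize(String input, boolean skipWhitespace) {
        List<Token> tokens = new ArrayList<>();
        int i = 0;
        while (i < input.length()) {
            boolean space = Character.isWhitespace(input.charAt(i));
            int start = i;
            while (i < input.length() && Character.isWhitespace(input.charAt(i)) == space) {
                i++;
            }
            String text = input.substring(start, i);
            if (!space) {
                tokens.add(new Token(text, DEFAULT_CHANNEL));
            } else if (!skipWhitespace) {
                tokens.add(new Token(text, HIDDEN_CHANNEL)); // kept, but off the parser's channel
            }
            // Otherwise the whitespace is dropped entirely, like skip().
        }
        return tokens;
    }

    /** What a parser reading only the default channel would see. */
    static List<String> parserView(List<Token> tokens) {
        List<String> visible = new ArrayList<>();
        for (Token t : tokens) {
            if (t.channel == DEFAULT_CHANNEL) {
                visible.add(t.text);
            }
        }
        return visible;
    }

    public static void main(String[] args) {
        List<Token> hidden = tokenize("a = 3.14", false);
        List<Token> skipped = tokenize("a = 3.14", true);
        System.out.println(hidden.size());      // 5
        System.out.println(skipped.size());     // 3
        System.out.println(parserView(hidden)); // [a, =, 3.14]
    }
}
```

Both approaches give the parser the same view. The hidden channel is the better fit when a later stage, such as a pretty-printer, needs the original whitespace or comments back.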

Here are examples of lexer rules that handle whitespace and comments.

下面是一些處理空白和注釋的 lexer 規則例子。

// Send runs of space and tab characters to the hidden channel.

WHITESPACE: (' ' | '\t')+ { $channel = HIDDEN; };

// Treat runs of newline characters as a single NEWLINE token.

// On some platforms, newlines are represented by a \n character.

// On others they are represented by a \r and a \n character.

NEWLINE: ('\r'? '\n')+;

// Single-line comments begin with //, are followed by any characters

// other than those in a newline, and are terminated by newline characters.

SINGLE_COMMENT: '//' ~('\r' | '\n')* NEWLINE { skip(); };

// Multi-line comments are delimited by /* and */

// and are optionally followed by newline characters.

MULTI_COMMENT options { greedy = false; }

: '/*' .* '*/' NEWLINE? { skip(); };

When the greedy option is set to true, the lexer matches as much input as possible. When false, it stops when input matches the next element in the lexer rule. The greedy option defaults to true except when the patterns ".*" and ".+" are used. For this reason, it didn't need to be specified in the example above.

當開啟貪婪模式的時候, lexer 會盡可能多的匹配字符。 關閉時,找到 lexer rule 里規定的下一個元素就會停止。 貪婪模式默認開啟,除非使用了 ".*" 和 ".+" 模式。 因此,在上面的例子中,不需要再特別指定。
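The effect of greediness on a multi-line comment rule is easiest to see on input that contains two comments. The sketch below uses java.util.regex as a stand-in (ANTLR lexer rules are not regular expressions, and the class name GreedySketch is invented here): the greedy quantifier .* runs to the last */ in the input, while the reluctant quantifier .*? stops at the first one, which is the behavior wanted for comments.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Contrasts greedy and non-greedy matching of a comment-like pattern. */
final class GreedySketch {
    /** Returns the first match of regex in input, or null if there is none. */
    static String firstMatch(String regex, String input) {
        // DOTALL lets '.' match newlines, as a multi-line comment requires.
        Matcher m = Pattern.compile(regex, Pattern.DOTALL).matcher(input);
        return m.find() ? m.group() : null;
    }

    public static void main(String[] args) {
        String input = "/* one */ a = 1 /* two */";
        // Greedy: swallows everything up to the last "*/".
        System.out.println(firstMatch("/\\*.*\\*/", input));  // /* one */ a = 1 /* two */
        // Non-greedy: stops at the first "*/".
        System.out.println(firstMatch("/\\*.*?\\*/", input)); // /* one */
    }
}
```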

If newline characters are to be used as statement terminators then they shouldn't be skipped or hidden since the parser needs to see them.

如果換行作為描述塊的結束符,不要丟棄或隱藏它,因為 parser 需要用到。

lexer grammar MathLexer;

// We want the generated lexer class to be in this package.

@header { package com.ociweb.math; }

APOSTROPHE: '\''; // for derivative

ASSIGN: '=';

CARET: '^'; // for exponentiation

FUNCTIONS: 'functions'; // for list command

HELP: '?' | 'help';

LEFT_PAREN: '(';

LIST: 'list';

PRINT: 'print';

RIGHT_PAREN: ')';

SIGN: '+' | '-';

VARIABLES: 'variables'; // for list command

NUMBER: INTEGER | FLOAT;

fragment FLOAT: INTEGER '.' '0'..'9'+;

fragment INTEGER: '0' | SIGN? '1'..'9' '0'..'9'*;

NAME: LETTER (LETTER | DIGIT | '_')*;

STRING_LITERAL: '"' NONCONTROL_CHAR* '"';

fragment NONCONTROL_CHAR: LETTER | DIGIT | SYMBOL | SPACE;

fragment LETTER: LOWER | UPPER;

fragment LOWER: 'a'..'z';

fragment UPPER: 'A'..'Z';

fragment DIGIT: '0'..'9';

fragment SPACE: ' ' | '\t';

// Note that SYMBOL does not include the double-quote character.

fragment SYMBOL: '!' | '#'..'/' | ':'..'@' | '['..'`' | '{'..'~';

// Windows uses \r\n. UNIX and Mac OS X use \n.

// To use newlines as a terminator,

// they can't be written to the hidden channel!

NEWLINE: ('\r'? '\n')+;

WHITESPACE: SPACE+ { $channel = HIDDEN; };

We'll be looking at the parser grammar soon. When parser rule alternatives contain literal strings, they are converted into references to automatically generated lexer rules. For example, we could eliminate the ASSIGN lexer rule above and change ASSIGN to '=' in the parser grammar.

我們馬上就會看到 parser 的 grammar 。 當 parser 的 規則中包含文本字符串時,它們會被自動生成轉換成 lexer rule ,然后再引用它。 比如,我們可以把上面例子里的 ASSIGN 刪掉,然后把 parser grammar 里的 ASSIGN 換成 '=' 。

Part IV - Parsers

The lexer creates tokens for all input character sequences that match lexer rules. It can be useful to create other tokens that either don't exist in the input (imaginary) or have a better name than what is found in the input. Imaginary tokens are often used to group other tokens. In the parser grammar ahead, the tokens that play this role are DEFINE, POLYNOMIAL, TERM, FUNCTION, DERIVATIVE and COMBINE.

Lexer 會為所有符合 lexer rule 的輸入字符流創建詞素。 下面的做法會很有用處,為輸入中并不存在的單元創建詞素,或使用比輸入中出現的更好理解的名字。 虛擬詞素經常被用來合并其它的詞素。 在后面的 parser grammar 中,扮演這種角色的有 DEFINE, POLYNOMIAL, TERM, FUNCTION, DERIVATIVE 和 COMBINE。

The syntax for specifying these kinds of tokens in a parser grammar is:

在 parser grammar 中指定這種類型的詞素的語法如下:

tokens {

imaginary-name;

better-name = 'input-name';

}

The syntax for defining rules is

定義一個 rule 的語法

fragment? rule-name arguments?

(returns return-values)?

throws-spec?

rule-options?

rule-attribute-scopes?

rule-actions?

: token-sequence-1

| token-sequence-2

...

;

exceptions-spec?

The fragment keyword only appears at the beginning of lexer rules that are used as fragments (described earlier).

關鍵詞 fragment 只會出現在 lexer 規則前面,它們會被作為 fragment (前面描述過了)。

Rule options include backtrack and k which customize those options for a specific rule instead of using the grammar-wide values specified as grammar options. They are specified using the syntax options { ... }.

規則配置里面包含 backtrack 和 k 會把它們指定的規則 rule 用當前值來替代全局 grammar 級指定的值。它們使用下面的語法來指定 options { ... }。

The token sequences are alternatives that can be selected by the rule. Each element in the sequences can be followed by an action which is target language code (such as Java) in curly braces. The code is executed immediately after a preceding element is matched by input.

詞素順序列被用來選擇各個規則。序列中的每一個元素都可以附加一個 action,它被用{ } 包圍的目標語言(比如 Java)編寫。在之前的元素被輸入流匹配之后該代碼會立即被執行。

The optional exceptions-spec customizes exception handling for this rule.

可選的 exceptions-spec 此規則的異常處理。

Elements in a token sequence can be assigned to variables so they can be accessed in actions. To obtain the text value of a token that is referred to by a variable, use $variable.text. There are several examples of this in the parser grammar that follows.

一個詞素中的元素可以被賦值給變量以利于它們可以被 action 訪問。要讀取一個變量指向詞素的文本值,要使用 $variable.text。后面的幾個例子中都有這種使用情況。

Creating ASTs

Parser grammars often create ASTs. To do this, the grammar option output must be set to AST.

Parser grammar 經常創建 AST。這樣的話,grammar option output 必須被設置成 AST。

There are two approaches for creating ASTs. The first is to use "rewrite rules". These appear after a rule alternative. This is the recommended approach in most cases. The syntax of a rewrite rule is

-> ^(parent child-1 child-2 ... child-n)

有兩種辦法來創建 AST。第一種是使用 "rewrite rules"。這種出現在一個規則的分支后面。這是大多數情況的推薦做法。Rewrite rule 語法 -> ^(parent child-1 child-2 ... child-n)

The second approach for creating ASTs is to use AST operators. These appear in a rule alternative, immediately after tokens. They work best for sequences like mathematical expressions. There are two AST operators. When a ^ is used, a new root node is created for all child nodes at the same level. When a ! is used, no node is created. This is often used for bits of syntax that aren't needed in the AST such as parentheses, commas and semicolons. When a token isn't followed by one of them, a new child node is created for that token using the current root node as its parent.

第二種創建AST語法樹的辦法是使用 AST 操作符。它們出現在一個規則的分支中間,緊跟詞素后面。在數學表達式中最適用。 有兩種 AST 操作符。使用 ^ 時,一個新的父(?不確定)節點會被創建給所用同一等級的子節點。使用 ! 時,不創建節點。這種經常用在一些不需要出現在 AST 中的語法片段,比如括號、逗號、和分號。當一個詞素后面沒有跟任何后綴時,默認創建一個當前根節點的子節點。

A rule can use both of these approaches, but each rule alternative can only use one approach.

一個規則可以同時使用兩種方法,但對于一個規則分支只能使用一種。
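The ^(parent child-1 ... child-n) notation describes a tree in which the first element is the root and the rest are its children. The following sketch builds such trees by hand and prints them in a parenthesized form. TreeSketch is a made-up class for illustration, not ANTLR's CommonTree, and the two trees in main are hypothetical results for the inputs "a = 3.14" and "print f(a)".

```java
import java.util.ArrayList;
import java.util.List;

/** A minimal tree node that prints as "(parent child-1 ... child-n)". */
final class TreeSketch {
    final String text;
    final List<TreeSketch> children = new ArrayList<>();

    TreeSketch(String text, TreeSketch... kids) {
        this.text = text;
        for (TreeSketch kid : kids) {
            children.add(kid);
        }
    }

    /** A leaf prints as its text; any other node prints as (text children...). */
    String toStringTree() {
        if (children.isEmpty()) {
            return text;
        }
        StringBuilder sb = new StringBuilder("(").append(text);
        for (TreeSketch child : children) {
            sb.append(' ').append(child.toStringTree());
        }
        return sb.append(')').toString();
    }

    public static void main(String[] args) {
        // A rewrite such as -> ^(ASSIGN NAME value) applied to "a = 3.14":
        // the '=' token becomes the root, and the name and value its children.
        TreeSketch assign = new TreeSketch("=", new TreeSketch("a"), new TreeSketch("3.14"));
        System.out.println(assign.toStringTree()); // (= a 3.14)

        // An imaginary token (FUNCTION) used as a parent to group real tokens.
        TreeSketch print = new TreeSketch("print",
                new TreeSketch("FUNCTION", new TreeSketch("f"), new TreeSketch("a")));
        System.out.println(print.toStringTree()); // (print (FUNCTION f a))
    }
}
```

Tokens that are matched but left out of the rewrite, such as parentheses or a line terminator, simply never appear in the tree.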

The following syntax is used to declare rule arguments and return types.

下面的語法用來聲明規則參數和返回值。

rule-name[type1 name1, type2 name2, ...]

returns [type1 name1, type2 name2, ...] :

...

;

The names after the rule name are arguments and the names after the returns keyword are return values.

規則名后面的名字是參數類型和參數變量名,而 returns 關鍵字后面的則是返回值類型和返回值變量名。

Note that rules can return more than one value. ANTLR generates a class to use as the return type of the generated method for the rule. Instances of this class hold all the return values. The generated method name matches the rule name. The name of the generated return type class is the rule name with "_return" appended.

注意,規則可以返回多個值。 ANTLR 會生成一個類用來做該規則生成方法的返回值類型。該類的實例則持有所有返回值。規則生成的方法名,或稱函數名和該規則名完全一致。 而生成的返回值類型名則會是規則名后加上"_return"。
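As a rough picture of what this means on the Java side, consider a hypothetical rule declared as range returns [double low, double high]. The sketch below imitates the shape of the result: a holder class named after the rule with "_return" appended, and a method named after the rule that returns an instance of it. It is a simplification written for this illustration. Real generated classes extend ANTLR runtime types and the rule method consumes tokens rather than taking strings.

```java
/** Imitates the shape of code generated for: range returns [double low, double high] */
final class ReturnScopeSketch {
    /** Stand-in for the generated return type; one field per declared return value. */
    static final class range_return {
        double low;
        double high;
    }

    /** Stand-in for the generated rule method; a real one would match tokens instead. */
    static range_return range(String lowText, String highText) {
        range_return retval = new range_return();
        retval.low = Double.parseDouble(lowText);
        retval.high = Double.parseDouble(highText);
        return retval;
    }

    public static void main(String[] args) {
        range_return r = range("1.5", "4");
        // A calling rule's action would read these as $r.low and $r.high.
        System.out.println(r.low + " .. " + r.high); // 1.5 .. 4.0
    }
}
```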

Here's our parser grammar.

parser grammar MathParser;

options {

// We're going to output an AST.

output = AST;

// We're going to use the tokens defined in our MathLexer grammar.

tokenVocab = MathLexer;

}

// These are imaginary tokens that will serve as parent nodes

// for grouping other tokens in our AST.

tokens {

COMBINE;

DEFINE;

DERIVATIVE;

FUNCTION;

POLYNOMIAL;

TERM;

}

// We want the generated parser class to be in this package.

@header { package com.ociweb.math; }

// This is the "start rule".

// EOF is a predefined token that represents the end of input.

// The "start rule" should end with this.

// Note the use of the ! AST operator

// to avoid adding the EOF token to the AST.

script: statement* EOF!;

statement: assign | define | interactiveStatement | combine | print;

// These kinds of statements only need to be supported

// when reading input from the keyboard.

interactiveStatement: help | list;

// Examples of input that match this rule include

// "a = 19", "a = b", "a = f(2)" and "a = f(b)".

assign: NAME ASSIGN value terminator -> ^(ASSIGN NAME value);

value: NUMBER | NAME | functionEval;

// A parenthesized group in a rule alternative is called a "subrule".

// Examples of input that match this rule include "f(2)" and "f(b)".

functionEval

: fn=NAME LEFT_PAREN (v=NUMBER | v=NAME) RIGHT_PAREN -> ^(FUNCTION $fn $v);

// EOF cannot be used in lexer rules, so we made this a parser rule.

// EOF is needed here for interactive mode where each line entered ends in EOF

// and for file mode where the last line ends in EOF.

terminator: NEWLINE | EOF;

// Examples of input that match this rule include

// "f(x) = 3x^2 - 4" and "g(y) = y^2 - 2y + 1".

// Note that two parameters are passed to the polynomial rule.

define

: fn=NAME LEFT_PAREN fv=NAME RIGHT_PAREN ASSIGN

polynomial[$fn.text, $fv.text] terminator

-> ^(DEFINE $fn $fv polynomial);

// Examples of input that match this rule include

// "3x^2 - 4" and "y^2 - 2y + 1".

// fnt = function name text; fvt = function variable text

// Note that two parameters are passed in each invocation of the term rule.

polynomial[String fnt, String fvt]

: term[$fnt, $fvt] (SIGN term[$fnt, $fvt])*

-> ^(POLYNOMIAL term (SIGN term)*);

// Examples of input that match this rule include

// "4", "4x", "x^2" and "4x^2".

// fnt = function name text; fvt = function variable text

term[String fnt, String fvt]

// tv = term variable

: c=coefficient? (tv=NAME e=exponent?)?

// What follows is a validating semantic predicate.

// If it evaluates to false, a FailedPredicateException will be thrown.

// It is testing whether the term variable matches the function variable.

{ tv == null ? true : ($tv.text).equals($fvt) }?

-> ^(TERM $c? $tv? $e?)

;

// This catches bad function definitions such as

// f(x) = 2y

catch [FailedPredicateException fpe] {

String tvt = $tv.text;

String msg = "In function \"" + fnt +

"\" the term variable \"" + tvt +

"\" doesn't match function variable \"" + fvt + "\".";

throw new RuntimeException(msg);

}

coefficient: NUMBER;

// An example of input that matches this rule is "^2".

exponent: CARET NUMBER -> NUMBER;

// Inputs that match this rule are "?" and "help".

help: HELP terminator -> HELP;

// Inputs that match this rule include

// "list functions" and "list variables".

list

: LIST listOption terminator -> ^(LIST listOption);

// Inputs that match this rule are "functions" and "variables".

listOption: FUNCTIONS | VARIABLES;

// Examples of input that match this rule include

// "h = f + g" and "h = f - g".

combine

: fn1=NAME ASSIGN fn2=NAME op=SIGN fn3=NAME terminator

-> ^(COMBINE $fn1 $op $fn2 $fn3);

// An example of input that matches this rule is

// print "f(" a ") = " f(a)

print

: PRINT printTarget* terminator -> ^(PRINT printTarget*);

// Examples of input that match this rule include

// 19, 3.14, "my text", a, f(), f(2), f(a) and f'().

printTarget

: NUMBER -> NUMBER

| sl=STRING_LITERAL -> $sl

| NAME -> NAME

// This is a function reference to print a string representation.

| NAME LEFT_PAREN RIGHT_PAREN -> ^(FUNCTION NAME)

| functionEval

| derivative

;

// An example of input that matches this rule is "f'()".

derivative

: NAME APOSTROPHE LEFT_PAREN RIGHT_PAREN -> ^(DERIVATIVE NAME);

Part V - Tree Parsers

Part V - 樹解析器

Rule actions add code before and/or after the generated code in the method generated for a rule. They can be used for AOP-like wrapping of methods. The syntax @init { ...code... } inserts the contained code before the generated code. The syntax @after { ...code... } inserts the contained code after the generated code. The tree grammar rules polynomial and term ahead demonstrate using @init.

Rule Action 可以在自動生成的規則函數代碼前或者后面加入自定義代碼。 可以被用在 AOP 類似的包裝函數中。 下面的語法 @init { ...code... } 會在生成代碼前面加入其中包含的代碼。 語法 @after { ...code... } 則會在生成代碼后面加上其中包含的代碼。 前面的樹文法規則 polynomial 和 term 演示了 @init 的使用。

Data is shared between rules in two ways: by passing parameters and/or returning values, or by using attributes. These are the same as the options for sharing data between Java methods in the same class. Attributes can be made accessible to a single rule (using @init to declare them), to a rule and all rules invoked by it (rule scope), or to all rules that request the named global scope of the attributes.

有兩種辦法在規則之間共享數據:傳遞參數或者返回值,或使用屬性。和 Java 在同一個類內部共享數據的方案一樣。 Attributes can be accessible to a single rule (using @init to declare them), a rule and all rules invoked by it (rule scope), or by all rules that request the named global scope of the attributes.

Attribute scopes define collections of attributes that can be accessed by multiple rules. There are two kinds, global and rule scopes.

屬性域定義其它規則可以訪問的各種屬性。有兩種全局和規則級的屬性域。

Global scopes are named scopes that are defined outside any rule. To request access to a global scope within a rule, add scope name; to the rule. To access multiple global scopes, list their names separated by spaces. The following syntax is used to define a global scope.

全局屬性域定義的屬性在任何規則(函數)之外。在規則(函數)內訪問全局屬性,,需要給規則添加 scope name; 。 要訪問多個全局屬性域, 列出所有用空格分隔的名字。下面的語法用來定義一個全局屬性域。

scope name {

type variable;

}

Rule scopes are unnamed scopes that are defined inside a rule. Rule actions in the defining rule and rules invoked by it access attributes in the scope with $rule-name::variable . The following syntax is used to define a rule scope.

規則域是未命名的域,在規則內部定義。 當前定義的規則的 action 中和當前規則應用的規則,使用如下語法訪問屬性 $rule-name::variable 。 下面的語法用來定義一個規則域。

scope {

type variable;

}

To initialize an attribute, use an @init rule action.

要初始化一個屬性,使用規則 action @init 。
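One way to picture a rule scope is as a stack: each invocation of the defining rule pushes a fresh set of attributes, rules it invokes read the top of the stack, and the attributes are popped when the rule returns. The sketch below models that idea in plain Java. It is a sketch of the concept under that assumption, not generated code, and the names ScopeSketch, DefineScope, define and term are chosen for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Models a rule scope as a stack of attribute holders. */
final class ScopeSketch {
    /** Stand-in for a rule scope declared as: scope { String fnName; } */
    static final class DefineScope {
        String fnName;
    }

    private final Deque<DefineScope> defineStack = new ArrayDeque<>();
    final StringBuilder log = new StringBuilder();

    /** Stand-in for the rule that defines the scope. */
    void define(String name, String... termTexts) {
        DefineScope scope = new DefineScope();
        defineStack.push(scope);  // fresh attributes for this invocation
        try {
            scope.fnName = name;  // the kind of setup an @init action performs
            for (String text : termTexts) {
                term(text);
            }
        } finally {
            defineStack.pop();    // attributes vanish when the rule returns
        }
    }

    /** Stand-in for a rule invoked by define; it reads the enclosing scope. */
    void term(String text) {
        // Comparable in spirit to an action that reads $define::fnName.
        log.append(defineStack.peek().fnName).append(':').append(text).append(';');
    }

    public static void main(String[] args) {
        ScopeSketch sketch = new ScopeSketch();
        sketch.define("f", "3x^2", "4x");
        sketch.define("g", "2y^3");
        System.out.println(sketch.log); // f:3x^2;f:4x;g:2y^3;
    }
}
```

Compared with passing the function name as a parameter to every invoked rule, a scope keeps the rule signatures short at the cost of a less visible dependency.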

tree grammar MathTree;

options {

// We're going to process an AST whose nodes are of type CommonTree.

ASTLabelType = CommonTree;

// We're going to use the tokens defined in

// both our MathLexer and MathParser grammars.

// The MathParser grammar already includes

// the tokens defined in the MathLexer grammar.

tokenVocab = MathParser;

}

@header {

// We want the generated tree parser class to be in this package.

package com.ociweb.math;

import java.util.Map;

import java.util.TreeMap;

}

// We want to add some fields and methods to the generated class.

@members {

// We're using TreeMaps so the entries are sorted on their keys

// which is desired when listing them.

private Map<String, Function> functionMap = new TreeMap<String, Function>();

private Map<String, Double> variableMap = new TreeMap<String, Double>();

// This adds a Function to our function Map.

private void define(Function function) {

functionMap.put(function.getName(), function);

}

// This retrieves a Function from our function Map

// whose name matches the text of a given AST tree node.

private Function getFunction(CommonTree nameNode) {

String name = nameNode.getText();

Function function = functionMap.get(name);

if (function == null) {

String msg = "The function \"" + name + "\" is not defined.";

throw new RuntimeException(msg);

}

return function;

}

// This evaluates a function whose name matches the text

// of a given AST tree node for a given value.

private double evalFunction(CommonTree nameNode, double value) {

return getFunction(nameNode).getValue(value);

}

// This retrieves the value of a variable from our variable Map

// whose name matches the text of a given AST tree node.

private double getVariable(CommonTree nameNode) {

String name = nameNode.getText();

Double value = variableMap.get(name);

if (value == null) {

String msg = "The variable \"" + name + "\" is not set.";

throw new RuntimeException(msg);

}

return value;

}

// This just shortens the code for print calls.

private static void out(Object obj) {

System.out.print(obj);

}

// This just shortens the code for println calls.

private static void outln(Object obj) {

System.out.println(obj);

}

// This converts the text of a given AST node to a double.

private double toDouble(CommonTree node) {

double value = 0.0;

String text = node.getText();

try {

value = Double.parseDouble(text);

} catch (NumberFormatException e) {

throw new RuntimeException("Cannot convert \"" + text + "\" to a double.");

}

return value;

}

// This replaces all escaped newline characters in a String

// with unescaped newline characters.

// It is used to allow newline characters to be placed in

// literal Strings that are passed to the print command.

private static String unescape(String text) {

return text.replaceAll("\\\\n", "\n");

}

} // @members

script: statement*;

statement: assign | combine | define | interactiveStatement | print;

// These kinds of statements only need to be supported

// when reading input from the keyboard.

interactiveStatement: help | list;

// This adds a variable to the map.

// Parts of rule alternatives can be assigned to variables (ex. v)

// that are used to refer to them in rule actions.

// Alternatively rule or token names (ex. NAME) can be used.

// We could have used $value in place of $v below.

assign: ^(ASSIGN NAME v=value) { variableMap.put($NAME.text, $v.result); };

// This returns a value as a double.

// The value can be a number, a variable name or a function evaluation.

value returns [double result]

: NUMBER { $result = toDouble($NUMBER); }

| NAME { $result = getVariable($NAME); }

| functionEval { $result = $functionEval.result; }

;

// This returns the result of a function evaluation as a double.

functionEval returns [double result]

: ^(FUNCTION fn=NAME v=NUMBER) {

$result = evalFunction($fn, toDouble($v));

}

| ^(FUNCTION fn=NAME v=NAME) {

$result = evalFunction($fn, getVariable($v));

}

;

// This builds a Function object and adds it to the function map.

define

: ^(DEFINE name=NAME variable=NAME polynomial) {

define(new Function($name.text, $variable.text, $polynomial.result));

}

;

// This builds a Polynomial object and returns it.

polynomial returns [Polynomial result]

// The "current" attribute in this rule scope is visible to

// rules invoked by this one, such as term.

scope { Polynomial current; }

@init { $polynomial::current = new Polynomial(); }

// There can be no sign in front of the first term,

// so "" is passed to the term rule.

// The coefficient of the first term can be negative.

// The sign between terms is passed to

// subsequent invocations of the term rule.

: ^(POLYNOMIAL term[""] (s=SIGN term[$s.text])*) {

$result = $polynomial::current;

}

;

// This builds a Term object and adds it to the current Polynomial.

term[String sign]

@init { boolean negate = "-".equals(sign); }

: ^(TERM coefficient=NUMBER) {

double c = toDouble($coefficient);

if (negate) c = -c; // applies sign to coefficient

$polynomial::current.addTerm(new Term(c));

}

| ^(TERM coefficient=NUMBER? variable=NAME exponent=NUMBER?) {

double c = coefficient == null ? 1.0 : toDouble($coefficient);

if (negate) c = -c; // applies sign to coefficient

double exp = exponent == null ? 1.0 : toDouble($exponent);

$polynomial::current.addTerm(new Term(c, $variable.text, exp));

}

;

// This outputs help on our language which is useful in interactive mode.

help

: HELP {

outln("In the help below");

outln("* fn stands for function name");

outln("* n stands for a number");

outln("* v stands for variable");

outln("");

outln("To define");

outln("* a variable: v = n");

outln("* a function from a polynomial: fn(v) = polynomial-terms");

outln(" (for example, f(x) = 3x^2 - 4x + 1)");

outln("* a function from adding or subtracting two others: " +

"fn3 = fn1 +|- fn2");

outln(" (for example, h = f + g)");

outln("");

outln("To print");

outln("* a literal string: print \"text\"");

outln("* a number: print n");

outln("* the evaluation of a function: print fn(n | v)");

outln("* the definition of a function: print fn()");

outln("* the derivative of a function: print fn'()");

outln("* multiple items on the same line: print i1 i2 ... in");

outln("");

outln("To list");

outln("* variables defined: list variables");

outln("* functions defined: list functions");

outln("");

outln("To get help: help or ?");

outln("");

outln("To exit: exit or quit");

}

;

// This lists all the functions or variables that are currently defined.

list

: ^(LIST FUNCTIONS) {

outln("# of functions defined: " + functionMap.size());

for (Function function : functionMap.values()) {

outln(function);

}

}

| ^(LIST VARIABLES) {

outln("# of variables defined: " + variableMap.size());

for (String name : variableMap.keySet()) {

double value = variableMap.get(name);

outln(name + " = " + value);

}

}

;

// This adds or subtracts two functions to create a new one.

combine

: ^(COMBINE fn1=NAME op=SIGN fn2=NAME fn3=NAME) {

Function f2 = getFunction(fn2);

Function f3 = getFunction(fn3);

if ("+".equals($op.text)) {

// "$fn1.text" is the name of the new function to create.

define(f2.add($fn1.text, f3));

} else if ("-".equals($op.text)) {

define(f2.subtract($fn1.text, f3));

} else {

// This should never happen since SIGN is defined to be either "+" or "-".

throw new RuntimeException(

"The operator \"" + $op.text +

"\" cannot be used for combining functions.");

}

}

;

// This prints a list of printTargets then prints a newline.

print

: ^(PRINT printTarget*)

{ System.out.println(); };

// This prints a single printTarget without a newline.

// "out", "unescape", "getVariable", "getFunction", "evalFunction"

// and "toDouble" are methods we wrote that were defined

// in the @members block earlier.

printTarget

: NUMBER { out($NUMBER); }

| STRING_LITERAL {

String s = unescape($STRING_LITERAL.text);

out(s.substring(1, s.length() - 1)); // removes quotes

}

| NAME { out(getVariable($NAME)); }

| ^(FUNCTION NAME) { out(getFunction($NAME)); }

// The next line uses the return value named "result"

// from the earlier rule named "functionEval".

| functionEval { out($functionEval.result); }

| derivative

;

// This prints the derivative of a function.

// This also could have been done in place in the printTarget rule.

derivative

: ^(DERIVATIVE NAME) {

out(getFunction($NAME).getDerivative());

}

;
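The two string steps in the STRING_LITERAL alternative of printTarget can be tried outside the grammar. The sketch below copies the unescape expression and the quote-stripping substring call into a standalone class. PrintTargetSketch and stripQuotes are names invented here, and the sample token text is an assumed example of what the lexer might deliver.

```java
// Standalone copy of two string steps used by the printTarget rule.
class PrintTargetSketch {
    // Same expression as the unescape method in the @members block:
    // the regex matches a backslash followed by the letter n.
    static String unescape(String text) {
        return text.replaceAll("\\\\n", "\n");
    }

    // Same quote removal as in the STRING_LITERAL alternative.
    static String stripQuotes(String s) {
        return s.substring(1, s.length() - 1);
    }

    public static void main(String[] args) {
        // Assumed token text: quote, a, backslash, n, b, quote.
        String tokenText = "\"a\\nb\"";
        String printed = stripQuotes(unescape(tokenText));
        System.out.println(printed.equals("a\nb")); // true
    }
}
```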

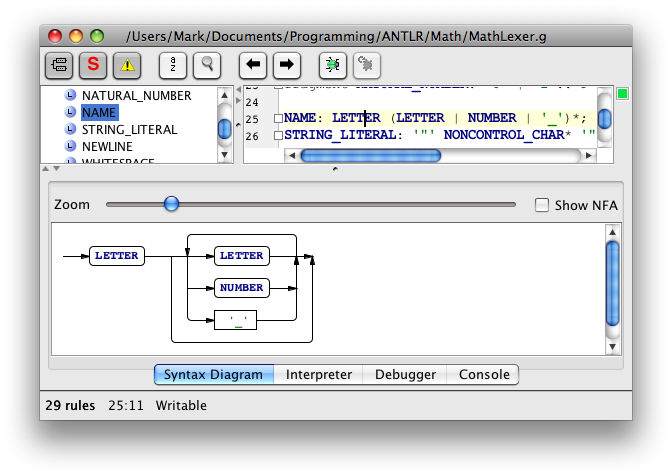

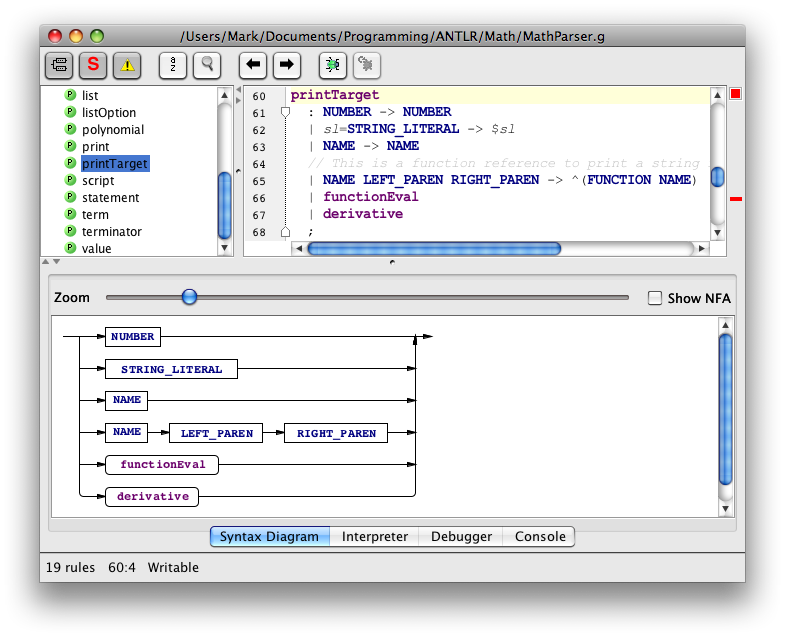

Part VI - ANTLRWorks

ANTLRWorks is a graphical grammar editor and debugger. It checks for grammar errors, including those beyond the syntax variety such as conflicting rule alternatives, and highlights them. It can display a syntax diagram for a selected rule. It provides a debugger that can step through creation of parse trees and ASTs.

ANTLRWorks 是一個圖形化的文法編輯器和調試器。它可以檢查文法的錯誤,甚至不是的語法上的而是邏輯錯誤,比如互相沖突的規則分支,然后高亮它們。 它可以給選中的規則顯示一個語法圖。

Rectangles in syntax diagrams correspond to fixed vocabulary symbols. Rounded rectangles correspond to variable symbols.

語法圖中的方框對應于確定的字符。圓角矩形對應于不定的字符。

Here's an example of a syntax diagram for a selected lexer rule.

下面是一個選中規則的語法圖示例。

Here's an example of a syntax diagram for a selected parser rule.

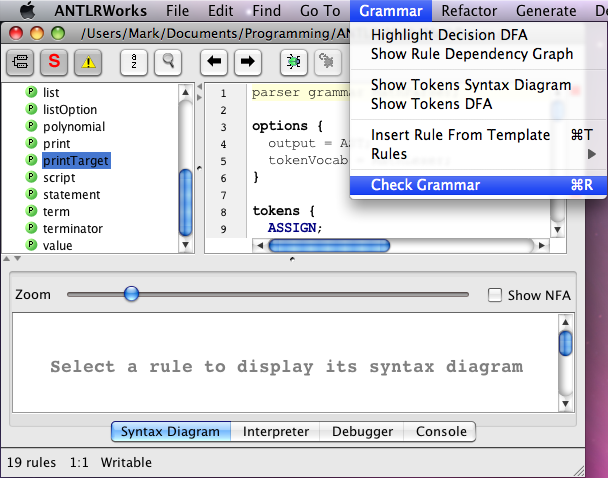

Here's an example of requesting a grammar check, followed by a successful result.

下面是檢查文法的例子,文法是正確的。

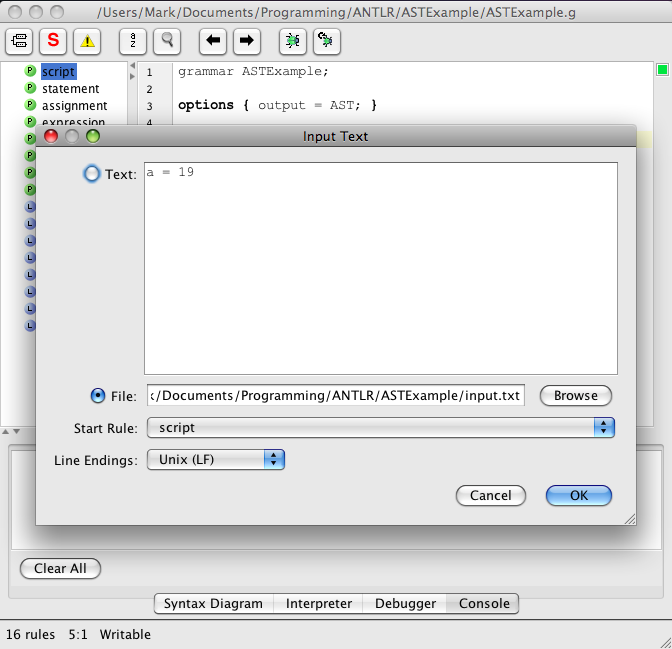

Using the ANTLRWorks debugger is simple when the lexer and parser rules are combined in a single grammar file, which is not the case in our example. Press the Debug toolbar button (with a bug on it), enter input text or select an input file, select the start rule (which allows debugging a subset of the grammar) and press the OK button. Here's an example of entering the input for a different, simpler grammar that defines the lexer and parser rules in a single file:

當 lexer 和 parser 的規則合并到一個文法文件中時,使用 ANTLRWorks 調試器會非常簡單。 不像我們的例子, 按下工具欄的調試按鈕(上面有一個蟲子的 ), 鍵入輸入文本或選擇一個文件, 選中開始規則 (也可以只是調試一些規則)然后點 OK 按鈕。 下面的例子展示了給一個簡單點的文法輸入文本,其 lexer 和 parser 規則都在一個文件中:

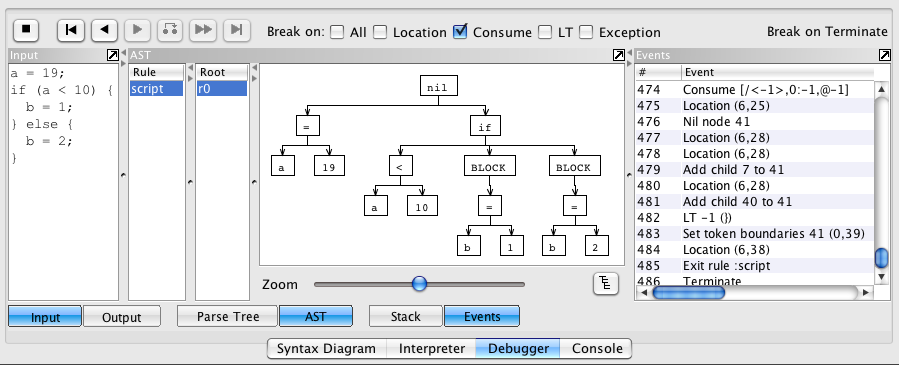

The debugger controls and output are displayed at the bottom of the ANTLRWorks window. Here's an example using that same, simpler grammar:

調試器的控件和輸出都在 ANTLRWorks 窗口的底部, 下面是使用上一個簡單文法的例子:

Using the debugger when the lexer and parser rules are in separate files, like in our example, is a bit more complicated. See the ANTLR Wiki page titled "When do I need to use remote debugging."

像我們的例子這樣, lexer 和 parser 規則在不同的文件中,使用調試器會稍微復雜一點。 請看 ANTLR Wiki 頁,標題 "When do I need to use remote debugging"。

Part VII - Putting It All Together

Part VII - 組裝所有部件!

終于翻到這里了。。

Next we need to write a class to utilize the classes generated by ANTLR. We'll call ours Processor. This class will use MathLexer (extends Lexer), MathParser (extends Parser) and MathTree (extends TreeParser). Note that the classes Lexer, Parser and TreeParser all extend the class BaseRecognizer. Our Processor class will also use other classes we wrote to model our domain. These classes are named Term, Function and Polynomial. We'll support two modes of operation, batch and interactive.

下面我們將要寫一個類來調用 ANTLR 自動生成的類。 我們叫它 Processor 。 這個類會使用 MathLexer (繼承自 Lexer), MathParser (繼承自 Parser) 和 MathTree (繼承自 TreeParser)。 注意,所有 Lexer , Parser 和 TreeParser 都繼承自類 BaseRecognizer 。 我們的 Processor 類也會使用一些其它的類,那些用來組成我們整個架構的類。 這些類是 Term , Function 和 Polynomial 。 我們將會支持兩種模式的操作,批處理模式和交互式。
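The domain classes Term, Function and Polynomial are not listed in this article. As a rough guide, here is a minimal sketch of what they might look like. The constructor and method signatures are inferred from the way the tree grammar calls them; the field names, the return type of getDerivative and all method bodies are assumptions, and the versions in the downloadable source may differ.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain classes; signatures inferred from the tree grammar.
class Term {
    final double coefficient;
    final String variable; // null for a constant term
    final double exponent;

    Term(double coefficient) { this(coefficient, null, 0.0); }

    Term(double coefficient, String variable, double exponent) {
        this.coefficient = coefficient;
        this.variable = variable;
        this.exponent = exponent;
    }

    double getValue(double x) { return coefficient * Math.pow(x, exponent); }

    // Power rule: d/dx (c * x^n) = (c * n) * x^(n - 1); constant terms drop out.
    Term getDerivative() {
        if (exponent == 0.0) return null;
        return new Term(coefficient * exponent, variable, exponent - 1);
    }
}

class Polynomial {
    private final List<Term> terms = new ArrayList<>();

    void addTerm(Term term) { terms.add(term); }

    double getValue(double x) {
        double sum = 0.0;
        for (Term t : terms) sum += t.getValue(x);
        return sum;
    }

    Polynomial getDerivative() {
        Polynomial result = new Polynomial();
        for (Term t : terms) {
            Term d = t.getDerivative();
            if (d != null) result.addTerm(d);
        }
        return result;
    }
}

class Function {
    private final String name;
    private final String variable;
    private final Polynomial polynomial;

    Function(String name, String variable, Polynomial polynomial) {
        this.name = name;
        this.variable = variable;
        this.polynomial = polynomial;
    }

    String getName() { return name; }

    double getValue(double x) { return polynomial.getValue(x); }

    Function getDerivative() {
        return new Function(name + "'", variable, polynomial.getDerivative());
    }
}

class DomainSketch {
    public static void main(String[] args) {
        // f(x) = 3x^2 - 4x + 1, with the sign already applied to each coefficient.
        Polynomial p = new Polynomial();
        p.addTerm(new Term(3, "x", 2));
        p.addTerm(new Term(-4, "x", 1));
        p.addTerm(new Term(1));
        Function f = new Function("f", "x", p);
        System.out.println(f.getValue(2));                 // 12 - 8 + 1 = 5.0
        System.out.println(f.getDerivative().getValue(2)); // 12 - 4 = 8.0
    }
}
```

The add and subtract methods that the combine rule calls are omitted to keep the sketch short.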

Here's our Processor class.

下面是我們的 Processor 類。

package com.ociweb.math;

import java.io.*;

import java.util.Scanner;

import org.antlr.runtime.*;

import org.antlr.runtime.tree.*;

public class Processor {

public static void main(String[] args) throws IOException, RecognitionException {

if (args.length == 0) {

new Processor().processInteractive();

} else if (args.length == 1) { // name of file to process was passed in

new Processor().processFile(args[0]);

} else { // more than one command-line argument

System.err.println("usage: java com.ociweb.math.Processor [file-name]");

}

}

private void processFile(String filePath) throws IOException, RecognitionException {

CommonTree ast = getAST(new FileReader(filePath));

//System.out.println(ast.toStringTree()); // for debugging

processAST(ast);

}

private CommonTree getAST(Reader reader) throws IOException, RecognitionException {

MathParser tokenParser = new MathParser(getTokenStream(reader));

MathParser.script_return parserResult =

tokenParser.script(); // start rule method

reader.close();

return (CommonTree) parserResult.getTree();

}

private CommonTokenStream getTokenStream(Reader reader) throws IOException {

MathLexer lexer = new MathLexer(new ANTLRReaderStream(reader));

return new CommonTokenStream(lexer);

}

private void processAST(CommonTree ast) throws RecognitionException {

MathTree treeParser = new MathTree(new CommonTreeNodeStream(ast));

treeParser.script(); // start rule method

}

private void processInteractive() throws IOException, RecognitionException {

MathTree treeParser = new MathTree(null); // a TreeNodeStream will be assigned later

Scanner scanner = new Scanner(System.in);

while (true) {

System.out.print("math> ");

String line = scanner.nextLine().trim();

if ("quit".equals(line) || "exit".equals(line)) break;

processLine(treeParser, line);

}

}

// Note that we can't create a new instance of MathTree for each

// line processed because it maintains the variable and function Maps.

private void processLine(MathTree treeParser, String line) throws RecognitionException {

// Run the lexer and token parser on the line.

MathLexer lexer = new MathLexer(new ANTLRStringStream(line));

MathParser tokenParser = new MathParser(new CommonTokenStream(lexer));

MathParser.statement_return parserResult =

tokenParser.statement(); // start rule method

// Use the token parser to retrieve the AST.

CommonTree ast = (CommonTree) parserResult.getTree();

if (ast == null) return; // line is empty

// Use the tree parser to process the AST.

treeParser.setTreeNodeStream(new CommonTreeNodeStream(ast));

treeParser.statement(); // start rule method

}

} // end of Processor class

Ant is a great tool for automating tasks used to develop and test grammars. Suggested independent "targets" include the following.

Ant 工具可以很好的幫助開發和調試文法的自動化任務。 支持下面每一個“目標”。

- Use org.antlr.Tool to generate Java classes and ".tokens" files from each grammar file.

  - ".tokens" files assign integer constants to token names and are used by org.antlr.Tool when processing subsequent grammar files.

  - The "uptodate" task can be used to determine whether the grammar has changed since the last build.

  - The "unless" target attribute can be used to avoid running org.antlr.Tool if the grammar hasn't changed since the last build.

- 使用 org.antlr.Tool 來從每一個文法文件中生成 Java 類和 ".tokens" 文件。

  - ".tokens" 給 token 綁定一個常量整數, 且 org.antlr.Tool 處理后續的文法文件時會用到它。

  - "uptodate" 任務可以用來確定文法在上次構建后是否有所改變。

  - "unless" 任務屬性可以用來避免在上次構建后文法沒有改變而 org.antlr.Tool 依然運行。

- Compile Java source files.

- 編譯 Java 源文件。

- Run automated tests.

- 執行自動測試。

- Run the application using a specific file as input.

- 使用特定文件作為輸入來運行程序。

- Delete all generated files (clean target).

- 刪除所有自動生成的文件 (清理目標)。

For examples of all of these, download the source code from the URL listed at the end of this article and see the build.xml file.

比如,對于所有這些任務,可以從文章末尾列出的URL下載源文件然后查看 build.xml 文件。
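The idea behind the "uptodate" check can be shown without Ant: regeneration is needed only when the grammar file is newer than the files generated from it. The sketch below is a rough Java equivalent of that timestamp comparison, not Ant's implementation. UpToDateSketch, isUpToDate and the file names Math.g and Math.tokens are invented for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;

// Rough model of an up-to-date check based on file timestamps.
class UpToDateSketch {
    // True when the generated file exists and is at least as new as the grammar.
    static boolean isUpToDate(Path grammar, Path generated) throws IOException {
        if (!Files.exists(generated)) return false;
        FileTime source = Files.getLastModifiedTime(grammar);
        FileTime target = Files.getLastModifiedTime(generated);
        return target.compareTo(source) >= 0;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("antlr-sketch");
        Path grammar = Files.createFile(dir.resolve("Math.g"));        // hypothetical name
        Path generated = Files.createFile(dir.resolve("Math.tokens")); // hypothetical name

        // Grammar edited after the last generation: the tool must run again.
        Files.setLastModifiedTime(grammar, FileTime.fromMillis(2_000_000L));
        Files.setLastModifiedTime(generated, FileTime.fromMillis(1_000_000L));
        System.out.println(isUpToDate(grammar, generated)); // false

        // Generated file is newer than the grammar: the tool can be skipped.
        Files.setLastModifiedTime(generated, FileTime.fromMillis(3_000_000L));
        System.out.println(isUpToDate(grammar, generated)); // true
    }
}
```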

Part VIII - Wrap Up

By default the parser only processes tokens from the default channel. It can, however, request tokens from other channels such as the hidden channel. Tokens are assigned unique, sequential indexes regardless of the channel to which they are written. This allows parser code to determine the order in which the tokens were encountered across all channels.

解析器默認只處理默認通道里的 token 。 它也可以從其它通道比如隱藏通道,里請求 token 。 Token 會被按連續順序,不管它將要被寫入哪個通道,賦給一個唯一的索引值。 這樣可以允許解析器確定 token 被發現的順序(不管他們要被寫入哪個通道)。

Here are some related public constants and methods from the Token class.

下面是一些 Token 類中相關的公有常量和函數。

- static final int DEFAULT_CHANNEL

- static final int HIDDEN_CHANNEL

- int getChannel(): This gets the number of the channel where this Token was written. 取得此 Token 要被寫入的通道數

- int getTokenIndex(): This gets the index of this Token. 取得當前 Token 的索引

Here are some related public methods from the CommonTokenStream class, which implements the TokenStream interface.

下面是一些 CommonTokenStream 類相關的公有函數,它實現了 TokenStream 接口。

- Token get(int index): This gets the Token found at a given position in the input. 取得輸入中 index 位置的 Token

- List getTokens(int start, int stop): This gets a List of Tokens found between given positions in the input. 取得輸入中指定一個區間的一串 Token

- int index(): This gets the index of the last Token that was read. 取得最近一次讀取的 Token 的index
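The relationship between channels and token indexes can be modeled in a few lines of plain Java. The sketch below does not use the ANTLR runtime: the class ChannelSketch, its Tok type, the lex and onChannel helpers and the two channel numbers are all assumptions made for illustration. It shows that tokens on the hidden channel still consume index values, so the gaps in the indexes seen on the default channel reveal where hidden tokens sat.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of token channels; it does not use the ANTLR runtime.
class ChannelSketch {
    static final int DEFAULT_CHANNEL = 0;  // values chosen for this sketch
    static final int HIDDEN_CHANNEL = 99;

    static class Tok {
        final String text;
        final int channel;
        final int index;

        Tok(String text, int channel, int index) {
            this.text = text;
            this.channel = channel;
            this.index = index;
        }
    }

    // Gives every token the next sequential index; whitespace goes to the hidden channel.
    static List<Tok> lex(String... pieces) {
        List<Tok> tokens = new ArrayList<>();
        for (String piece : pieces) {
            int channel = piece.trim().isEmpty() ? HIDDEN_CHANNEL : DEFAULT_CHANNEL;
            tokens.add(new Tok(piece, channel, tokens.size()));
        }
        return tokens;
    }

    // Returns only the tokens written to the given channel, in input order.
    static List<Tok> onChannel(List<Tok> tokens, int channel) {
        List<Tok> result = new ArrayList<>();
        for (Tok t : tokens) {
            if (t.channel == channel) result.add(t);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Tok> all = lex("a", " ", "=", " ", "1");
        // Prints 0:a, 2:= and 4:1, one per line.
        for (Tok t : onChannel(all, DEFAULT_CHANNEL)) {
            System.out.println(t.index + ":" + t.text);
        }
    }
}
```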

We have demonstrated the basics of using ANTLR. For information on advanced topics, see the slides from the presentation on which this article was based at http://www.ociweb.com/mark/programming/ANTLR3.html. This web page contains links to the slides and the code presented in this article. The advanced topics covered in these slides include the following.

我們已經展示了 ANTLR 基本使用方法。 一些高階內容方面的信息,可以看本文所基于內容的幻燈片,地址: http://www.ociweb.com/mark/programming/ANTLR3.html 。 這個頁面包含了幻燈片的鏈接和本文展示的代碼。 這些幻燈片涵蓋了下面幾個高階部分

- remote debugging

- using the StringTemplate library

- details on the use of lookahead in grammars

- three kinds of semantic predicates: validating, gated and disambiguating

- syntactic predicates

- customizing error handling

- gUnit grammar unit testing framework

- 遠程調試

- 使用 StringTemplate 庫

- 在文法中使用前看的詳細信息

- 三種語義斷言: validating, gated 和 disambiguating

- 語法斷言

- 自定義錯誤處理

- gUnit grammar 單元測試框架

Many programming languages have been implemented using ANTLR. These include Boo, Groovy, Mantra, Nemerle and XRuby.

很多編程語言都被用 ANTLR 重新實現了。 包括 Boo, Groovy, Mantra, Nemerle 和 XRuby 。

Many other kinds of tools use ANTLR in their implementation. These include Hibernate (for its HQL to SQL query translator), IntelliJ IDEA, Jazillian (translates COBOL, C and C++ to Java), JBoss Rules (was Drools), Keynote (from Apple), WebLogic (from Oracle), and many more.

很多其它類的工具也使用了 ANTLR 。 包括 Hibernate ( HQL 到 SQL 查詢轉換器部分), Intellij IDEA, Jazillian (把 COBOL, C 和 C++ 翻譯成 Java), JBoss Rules (曾名 Drools), Keynote (源自 Apple), WebLogic (源自 Oracle), 等等等等。

Currently only one book on ANTLR is available. Terence Parr, creator of ANTLR, wrote "The Definitive ANTLR Reference." It is published by "The Pragmatic Programmers." Terence is working on a second book for the same publisher that may be titled "ANTLR Recipes."

當前, ANTLR 書只有一本。(不知道現在咋樣了。。 ) Terence Parr, ANTLR 作者,編寫的 "The Definitive ANTLR Reference" 《ANTLR 權威參考手冊?》由 "The Pragmatic Programmers" 出版。 Terence 正在致力于幫該出版社寫另一本可能會命名為 "ANTLR 秘訣"。

There you have it! ANTLR is a great tool for generating custom language parsers. We hope this article will make it easier to get started creating validators, processors and translators.

你已成佛! ANTLR 是一個非常好的生成特制語言解析器的工具。 我們希望本文讓開始創建 驗證器,處理器和翻譯器 的過程變簡單些了。