Introduction

The Intel TBB was released by Intel during their movement to enhance system performance by cores, rather than by Gigahertz. It was meant for their use, but they released a trial download and an open source edition. It will scale to function as a powerful tool for those going into parallel programming and multi-core programming. Note that some powerful sample code can be downloaded from http://www.threadingbuildingblocks.org/.

Preface

Sometimes, the terms concurrency and parallelism are used interchangeably. But a multithreaded application can have threads execute simultaneously while residing on one physical chip. Parallelism, however, is achieved only when those concurrent threads are executing on separate cores of a multicore chip. When we write an algorithm to use concurrency, our most important design choice should be to figure out how to partition the original problem into individual sub-parts. Because most programs spend a lot of their execution time running loops over an iteration range, we should standardize the use of the Intel TBB. For example, loops which use C++ STL iterators loop over the contents of a collection of data. This iteration space must be determined before we can parallelize those loops. IntelTBB is a C++ template-based library for loop-level parallelism that concentrates on defining tasks rather than explicit threads. The components of TBB include generic parallel algorithms, concurrent containers, low-level synchronization primitives, and a task scheduler. At the time of this writing, TBB 3.0 is the most recent version. Programmers usingTBB can parallelize the execution of loop iterations by treating chunks of iterations as tasks and allowing the TBBtask scheduler to determine the task sizes, number of threads to use assignment of tasks to those threads, and how those threads are scheduled for execution. The task scheduler will give precedence to tasks that have been most recently in a core with the idea of making best use of the cache that likely contains the task’s data. When thinking tasks as opposed to threads, we should be concerned about performance, load balance between different sub-problems, data sharing, control, and data dependencies among the sub-problems.

The task scheduler utilizes a task-stealing mechanism to load balance the execution. Furthermore, breaking a program into separate functional blocks and assigning a separate thread to each block is a solution that usually does not scale well because, typically, the number of functional blocks is fixed. In contrast, Threading Building Blocks emphasizes data-parallel programming, enabling multiple threads to work most efficiently together. Data-parallel programming scales well to larger numbers of processors by dividing a data set into smaller pieces. Breaking apart a function into smaller bits of work will limit scalability and possibly render a performance hit, as the number of methods in a program is rarely dynamic. Taking a data parallelism approach resolves that issue by breaking the iteration space into independent units of work. Any thread that uses an algorithm template from the library or the task scheduler must have an initialized tbb::task_scheduler_init object. A thread may have more than one of these objects initialized at a time. The task scheduler shuts down when all task_scheduler_init objects terminate. By default, the constructor for task_scheduler_init does the initialization and the destructor does the termination. Thus, declaring a task_scheduler_init in main( ), as in the example shown below, both starts and shuts down the scheduler.

#include "tbb/task_scheduler_init.h"

using namespace tbb;

int main( ) {

task_scheduler_init init;

...

return 0;

}The parallel_for template parallelizes tasks that are contained within a for loop. The template requires two parameters: a range type over which to iterate and a body type that executes iterations over the range or a subrange. The range class must define a copy constructor and a destructor, the methods is_empty() (which returns TRUE if the range is empty) and is_divisible() (which returns TRUE if the range can be split), and a splitting constructor (to divide the range in half). A partitioner class object can be used to heuristically find the smallest number of iterations that should be assigned. The TBB library contains two predefined range types:blocked_range and blocked_range2D. These ranges are used for single- and two dimensional ranges, respectively. The body class must define a copy constructor and a destructor as well as the operator(). Theoperator() will contain a copy of the original serial loop that has been modified to run over a subrange of values that come from the range type. The parallel_reduce template will iterate over a range and combine partial results computed by each task into a final (reduction) value. The range type for parallel_reduce has the same requirements as parallel_for. The body type needs a splitting constructor and a join method. The splitting constructor in the body copies read-only data required to run the loop body and to assign the identity element of the reduction operation that initializes the reduction variable. The join method combines partial results of tasks based on the reduction operation being used. So having that, let’s look at an example.

A Simple Example of Parallel Sum

The parallel sum operation can work with any associative and commutative combining operation. For example, the operations of multiplication, maximum, minimum, and some logical operations would all be appropriate. In a serial program, the commutative property of the operation is not important, since the order with which the serial execution combines the elements is the same each and every time. Nevertheless, to have any chance of performing the computation concurrently, we rely on this property of the operation, since concurrent execution does not impose a fixed schedule on the order of independent computations.

#include <cstdio>

#include <cstdlib>

#include <cstring>

#include <ctype.h>

#include "parallel_reduce.h"

#include "blocked_range.h"

#include "task_scheduler_init.h"

#include "tick_count.h"

using namespace tbb;

#define AUTO_GRAIN

struct Sum {

float value;

Sum() : value(0) {}

Sum( Sum& s, split ) {value = 0;}

void operator()( const blocked_range<float*>& range ) {

float temp = value;

for( float* a=range.begin(); a!=range.end(); ++a ) {

temp += *a;

}

value = temp;

}

void join( Sum& rhs ) {value += rhs.value;}

};

float ParallelSum( float array[], size_t n ) {

Sum total;

#ifndef AUTO_GRAIN

parallel_reduce( blocked_range<float*>( array, array+n, 1000 ),

total );

#else

parallel_reduce( blocked_range<float*>( array, array+n ),

total, auto_partitioner() );

#endif

return total.value;

}

const int N = 1000000;

static int MaxThreads = 4;

static bool VerboseFlag = false;

static void ParseCommandLine( int argc, char* argv[] ) {

int i = 1;

if( i<argc )="=0" verboseflag="true;"><argc>

<argc maxthreads="strtol(argv[i++],0,0);" i="0;">< N; ++i ) {

input[i] = (float)(rand() % 10);

}

if( VerboseFlag ) {

printf(" Input: ");

for ( size_t i = 0; i < 7; ++i ) {

printf("%7.2f ", input[i]);

}

printf("...\n");

}

for( int p=1; p<=MaxThreads; ++p ) {

task_scheduler_init init(p);

tick_count t0 = tick_count::now();

float output = ParallelSum(input, N);

tick_count t1 = tick_count::now();

if( VerboseFlag ) {

printf("Output: %7.2f\n", output);

}

printf("%2d threads: %.3f msec\n", p, (t1-t0).seconds()*1000);

}

return 0;

}This Intel code was compiled via the Intel C++ Professional Compiler, a compiler that when installed, integrates into Microsoft Visual Studio 2008 or 2010. Once the installation is completed, then it is time to install the performance library Intel TBB 3.0. The included documentation will step you through configuring the environment in order for the compiler to reference the include header files and the Lib files. Notice the results:

1 threads: 6.513 msec

2 threads: 3.333 msec

3 threads: 3.496 msec

4 threads: 3.345 msec

It turns out that executing 4 threads in parallel via the Intel TBB takes the time that the execution of one thread does. Another performance gain is how the Intel TBB library manages to keep things thread-safe. After ascertaining that the body is safe to run in parallel, then the simplest approach to parallelizing the loop would be to divide the size of the loop (the upper range – lowest range – assuming that the iterations are in ascending order) by the number of processors. This approach however, is best left to the task scheduler. A simple division process could easily lead to an inefficient use those processors.

Now consider a composite integer, composite meaning that the integer is either odd or even. We know that a prime number is divisible by itself and one. But any composite integer can be broken down into a factor of primes. Therefore, calculating and then locating the number of primes in a range of integers would involve a continual computational procedure. Examine this code below:

#include <cctype>

#include <cstdlib>

#include <cstdio>

#include <cstring>

#include <math.h>

#include <cassert>

#include "parallel_reduce.h"

#include "task_scheduler_init.h"

#include "tick_count.h"

using namespace std;

using namespace tbb;

typedef unsigned long Number;

static bool PrintPrimes = false;

static Number GrainSize = 1000;

class Multiples {

inline Number strike( Number start,

Number limit,

Number stride ) {

bool* is_composite = my_is_composite;

assert( stride>=2 );

for(start =0;start < limit; start+="stride" i+="2" +="m&1;" i="3;"

n_factor="0;"

my_factor="new" my_striker="new"

my_is_composite="new"

m="Number(sqrt(double(n)));"

is_composite[start]="true;" k="0;"

is_composite="my_is_composite;" my_factor[n_factor++]="i;"

my_striker[n_factor]="strike(" As we would expect, the higher degree of parallelism we go, the quicker the computation outputs its results:

#primes from [2..100000000] = 5761455 (1.10 sec with serial code)

#primes from [2..100000000] = 5761455 (1.10 sec with 1-way parallelism)

#primes from [2..100000000] = 5761455 (0.59 sec with 2-way parallelism)

#primes from [2..100000000] = 5761455 (0.57 sec with 3-way parallelism)

#primes from [2..100000000] = 5761455 (0.60 sec with 4-way parallelism)

Where Are We Now?

Up to this point, we have been noting that sometimes part of a computation involves computing the summation of a large set of values. If multiple processors are available, instead of adding the values together sequentially, the set can be partitioned and the summations of the subsets computed simultaneously, each on a different processor. The partial sums are then combined to get the final answer. Thus, using multiple processors to compute in parallel may allow us to obtain a solution sooner. Also, if each processor has its own memory, partitioning the data between the processors may allow larger problems to be handled than could be handled on a single processor. So if we wanted to form a set of integers that start at 1, and goes from 1/1 +n.. we could add them but the sequence would not end at the number 2. The sequence would be an infinite set of fractional values that approach to 2 as a limit, never actually equaling 2. But the more values that we add, the smaller they become.

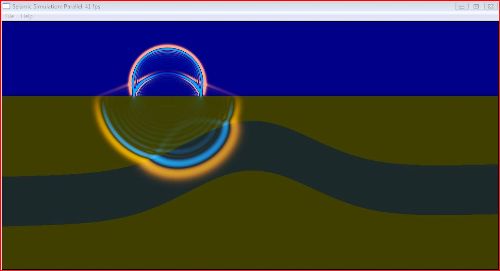

Here is a simple seismic wave simulation (wave propagation) based on parallel_for and blocked_range. The main body of the program steps through the simulation of a seismic wave in a core loop that sets the impulse from the source of the disturbance, does the two tough computations of stress updates and velocities, and finally cleans the edges of the simulation. Seismic waves are waves of sound that travel through the core of the earth, perhaps because of the result of an earthquake or explosion. Because sound waves form a chain of waves (similar to how a train gets louder upon arrival; upon departure, the sound minimizes until it cannot be heard. That is the end of the chain of sound waves). In earlier times, scientists would blow some dynamite. With a device (similar in principle to a seismograph) that would track the directions of the sound waves. One direction meant diffraction off of a denser medium, and they learned by following those waves until diffraction would cause a change in direction. After a while, they would that one type of change in direction on the graph, mine that area, and perhaps would find salt. Sometimes they would even find oil, but their intentions were to find gold. Similarly, when a particle has been displaced, some force displaced it. It has moved in a vector path, meaning the displacement is measured by both the direction and magnitude. Tracing the path, and then plotting each point of that path on a grid would reveal where the change in direction took place.

I introduce this program because it espouses an underlying concept used in DNA sequencing, etc. in computer animation, rendering is the step where information from the animation files, such as lighting, textures, and shading, is applied to 3D models to generate the 2D image that makes up a frame of the film. Parallel computing is essential to generate the needed number of frames (24 per second) for a feature-length film. Now notice the rippling effect in the image below:

Seismic Wave Propagation

#define VIDEO_WINMAIN_ARGS

#include "../../common/gui/video.h"

#include <cstring>

#include <cstdlib>

#include <math.h

#include <cctype>

#include <cstdio>

#include <cassert>

#include "tbb/task_scheduler_init.h"

#include "tbb/blocked_range.h"

#include "tbb/parallel_for.h"

#include "tbb/tick_count.h"

using namespace std;

#ifdef _MSC_VER

#pragma warning(disable: 4068)

#endif

#define DEFAULT_NUMBER_OF_FRAMES 100

int number_of_frames = -1;

const size_t MAX_WIDTH = 1024;

const size_t MAX_HEIGHT = 512;

int UniverseHeight=MAX_HEIGHT;

int UniverseWidth=MAX_WIDTH;

typedef float value;

static value V[MAX_HEIGHT][MAX_WIDTH];

static value S[MAX_HEIGHT][MAX_WIDTH];

static value T[MAX_HEIGHT][MAX_WIDTH];

static value M[MAX_HEIGHT][MAX_WIDTH];

static value L[MAX_HEIGHT][MAX_WIDTH];

static value D[MAX_HEIGHT][MAX_WIDTH];

static tbb::affinity_partitioner Affinity;

enum MaterialType {

WATER=0,

SANDSTONE=1,

SHALE=2

};

static unsigned char Material[MAX_HEIGHT][MAX_WIDTH];

static const colorcomp_t MaterialColor[4][3] = { {96,0,0}, {0,48,48}, {32,32,23} };

static const int DamperSize = 32;

static const int ColorMapSize = 1024;

static color_t ColorMap[4][ColorMapSize];

static int PulseTime = 100;

static int PulseCounter;

static int PulseX = UniverseWidth/3;

static int PulseY = UniverseHeight/4;

static bool InitIsParallel = true;

const char *titles[2] = {"Seismic Simulation: Serial", "Seismic Simulation: Parallel"};

int threads_low = 0, threads_high = tbb::task_scheduler_init::automatic;

static void UpdatePulse() {

if( PulseCounter>0 ) {

value t = (PulseCounter-PulseTime/2)*0.05f;

V[PulseY][PulseX] += 64*sqrt(M[PulseY][PulseX])*exp(-t*t);

--PulseCounter;

}

}

static void SerialUpdateStress() {

drawing_area drawing(0, 0, UniverseWidth, UniverseHeight);

for( int i=1; i<universeheight-1; j="1;">

<universewidth-1; +="M[i][j]*(V[i][j+1]-V[i][j]);"

index="(int)(V[i][j]*(ColorMapSize/2))"><0 ) index = 0;

if( index>=ColorMapSize ) index = ColorMapSize-1;

color_t* c = ColorMap[Material[i][j]];

drawing.put_pixel(c[index]);

}

}

}

struct UpdateStressBody {

void operator()( const tbb::blocked_range<int>& range ) const {

drawing_area drawing(0, range.begin(),

UniverseWidth, range.end()-range.begin());

int i_end = range.end();

for( int y = 0, i=range.begin(); i!=i_end; ++i,y++ ) {

drawing.set_pos(1, y);

#pragma ivdep

for( int j=1; j<universewidth-1;

+="M[i][j]*(V[i][j+1]-V[i][j]);"

index="(int)(V[i][j]*(ColorMapSize/2))"><0 ) index = 0;

if( index>=ColorMapSize ) index = ColorMapSize-1;

color_t* c = ColorMap[Material[i][j]];

drawing.put_pixel(c[index]);

}

}

}

};

static void ParallelUpdateStress() {

tbb::parallel_for( tbb::blocked_range<int>( 1, UniverseHeight-1 ),

UpdateStressBody(),

Affinity );

}

static void SerialUpdateVelocity() {

for( int i=1; i<universeheight-1; j="1;">

<universewidth-1; v[i][j]="D[i][j]*(V[i][j]"><int>& range ) const {

int i_end = range.end();

for( int i=range.begin(); i!=i_end; ++i )

#pragma ivdep

for( int j=1; j<universewidth-1;

v[i][j]="D[i][j]*(V[i][j]"><int>( 1,

UniverseHeight-1 ), UpdateVelocityBody(),

Affinity );

}

void SerialUpdateUniverse() {

UpdatePulse();

SerialUpdateStress();

SerialUpdateVelocity();

}

void ParallelUpdateUniverse() {

UpdatePulse();

ParallelUpdateStress();

ParallelUpdateVelocity();

}

class seismic_video : public video

{

void on_mouse(int x, int y, int key) {

if(key == 1 && PulseCounter == 0) {

PulseCounter = PulseTime;

PulseX = x; PulseY = y;

}

}

void on_key(int key) {

key &= 0xff;

if(char(key) == ' ') InitIsParallel = !InitIsParallel;

else if(char(key) == 'p') InitIsParallel = true;

else if(char(key) == 's') InitIsParallel = false;

else if(char(key) == 'e') updating = true;

else if(char(key) == 'd') updating = false;

else if(key == 27) running = false;

title = InitIsParallel?titles[1]:titles[0];

}

void on_process() {

tbb::task_scheduler_init Init(threads_high);

do {

if( InitIsParallel )

ParallelUpdateUniverse();

else

SerialUpdateUniverse();

if( number_of_frames > 0 ) --number_of_frames;

} while(next_frame() && number_of_frames);

}

} video;

void InitializeUniverse() {

PulseCounter = PulseTime;

in order to avoid denormal waves.

for( int i=0; i<universeheight; j="0;"><universewidth;

t[i][j]="S[i][j]" i="1;"><universeheight-1; j="1;">

<universewidth-1; x="float(j-UniverseWidth/2)/

(UniverseWidth/2);" t="(value)i/UniverseHeight;"

d[i][j]="1.0;"><0.3f ) {

m = WATER;

M[i][j] = 0.125;

L[i][j] = 0.125;

} else if( fabs(t-0.7+0.2*exp(-8*x*x)+0.025*x)<=0.1 ) {

m = SHALE;

M[i][j] = 0.5;

L[i][j] = 0.6;

} else {

m = SANDSTONE;

M[i][j] = 0.3;

L[i][j] = 0.4;

}

Material[i][j] = m;

}

}

value scale = 2.0f/ColorMapSize;

for( int k=0; k<4; ++k ) {

for( int i=0; i<colormapsize; t="(i-ColorMapSize/2)*scale;" r="t">0 ? t : 0;

value b = t<0 ? -t : 0;

value g = 0.5f*fabs(t);

memcpy(c, MaterialColor[k], sizeof(c));

c[2] = colorcomp_t(r*(255-c[2])+c[2]);

c[1] = colorcomp_t(g*(255-c[1])+c[1]);

c[0] = colorcomp_t(b*(255-c[0])+c[0]);

ColorMap[k][i] = video.get_color(c[2], c[1], c[0]);

}

}

value d = 1.0;

for( int k=DamperSize-1; k>0; --k ) {

d *= 1-1.0f/(DamperSize*DamperSize);

for( int j=1; j<universewidth-1; *="d;" i="1;">

<universeheight-1; *="d;"> 1 && isdigit(argv[1][0])) {

char* end; threads_high = threads_low = (int)strtol(argv[1],&end,0);

switch( *end ) {

case ':': threads_high = (int)strtol(end+1,0,0); break;

case '\0': break;

default: printf("unexpected character = %c\n",*end);

}

}

if (argc > 2 && isdigit(argv[2][0])){

number_of_frames = (int)strtol(argv[2],0,0);

}

video.title = InitIsParallel?titles[1]:titles[0];

#ifdef _WINDOWS

#define MAX_LOADSTRING 100

TCHAR szWindowClass[MAX_LOADSTRING]; LoadStringA(video::win_hInstance,

IDC_SEISMICSIMULATION, szWindowClass, MAX_LOADSTRING);

LRESULT CALLBACK WndProc(HWND hWnd, UINT message, WPARAM wParam, LPARAM lParam);

WNDCLASSEX wcex; memset(&wcex, 0, sizeof(wcex));

wcex.lpfnWndProc = (WNDPROC)WndProc;

wcex.hIcon =

LoadIcon(video::win_hInstance, MAKEINTRESOURCE(IDI_SEISMICSIMULATION));

wcex.hCursor = LoadCursor(NULL, IDC_ARROW);

wcex.hbrBackground = (HBRUSH)(COLOR_WINDOW+1);

wcex.lpszMenuName = LPCTSTR(IDC_SEISMICSIMULATION);

wcex.lpszClassName = szWindowClass;

wcex.hIconSm = LoadIcon(video::win_hInstance, MAKEINTRESOURCE(IDI_SMALL));

video.win_set_class(wcex); video.win_load_accelerators(IDC_SEISMICSIMULATION);

#endif

if(video.init_window(UniverseWidth, UniverseHeight)) {

video.calc_fps = true;

video.threaded = threads_low > 0;

InitializeUniverse();

video.main_loop();

}

else if(video.init_console()) {

if(number_of_frames <= 0) number_of_frames = DEFAULT_NUMBER_OF_FRAMES;

if(threads_high == tbb::task_scheduler_init::automatic) threads_high = 4;

if(threads_high < threads_low) threads_high = threads_low;

for( int p = threads_low; p <= threads_high; ++p ) {

InitializeUniverse();

tbb::task_scheduler_init init(tbb::task_scheduler_init::deferred);

if( p > 0 )

init.initialize( p );

tbb::tick_count t0 = tbb::tick_count::now();

if( p > 0 )

for( int i=0; i<number_of_frames; i="0;">

<number_of_frames; t1="tbb::tick_count::now();"> 0 )

printf(" with %d way parallelism\n",p);

else

printf(" with serial version\n");

}

}

video.terminate();

return 0;

}

#ifdef _WINDOWS

LRESULT CALLBACK About(HWND hDlg, UINT message, WPARAM wParam, LPARAM lParam)

{

switch (message)

{

case WM_INITDIALOG: return TRUE;

case WM_COMMAND:

if (LOWORD(wParam) == IDOK || LOWORD(wParam) == IDCANCEL) {

EndDialog(hDlg, LOWORD(wParam));

return TRUE;

}

break;

}

return FALSE;

}

LRESULT CALLBACK WndProc(HWND hWnd, UINT message, WPARAM wParam, LPARAM lParam)

{

int wmId, wmEvent;

switch (message) {

case WM_COMMAND:

wmId = LOWORD(wParam);

wmEvent = HIWORD(wParam);

switch (wmId)

{

case IDM_ABOUT:

DialogBox(video::win_hInstance, MAKEINTRESOURCE(IDD_ABOUTBOX), hWnd,

(DLGPROC)About);

break;

case IDM_EXIT:

PostQuitMessage(0);

break;

case ID_FILE_PARALLEL:

if( !InitIsParallel ) {

InitIsParallel = true;

video.title = titles[1];

}

break;

case ID_FILE_SERIAL:

if( InitIsParallel ) {

InitIsParallel = false;

video.title = titles[0];

}

break;

case ID_FILE_ENABLEGUI:

video.updating = true;

break;

case ID_FILE_DISABLEGUI:

video.updating = false;

break;

default:

return DefWindowProc(hWnd, message, wParam, lParam);

}

break;

default:

return DefWindowProc(hWnd, message, wParam, lParam);

}

return 0;

}

#endifAdmittedly, the concept of wave propagation is difficult enough with adding both a serial and a parallel approach. But the number of professional areas that use parallel programming is astounding. This is why choosing a powerful threading library is essential. Examine the output. The image takes a few minutes to complete:

A disturbance occurs in the earth or some elastic medium and triggers a chain, or sequence of waves, waves that traveling with velocity and direction. Acceleration is not really an accurate measure. Now examine the image after around 30 seconds later. The frames second pretty much remain in the same range:

The Ray Tracer Example

The MSDN Parallel Computing Center offers a download of parallel programming examples, both in native code and managed code. The Ray Tracer examples has become famous because Ray tracing is fundamentally a “delightfully parallel problem”. Each individual pixel in the image is generated by firing an imaginary ray of light, examining the color of that ray as it bounces off of and through objects in the scene, and storing the resulting color. Every pixel is thus independent of every other pixel, allowing them all to be processed in parallel. The example can be downloaded fromhttp://code.msdn.microsoft.com/ParExtSamples. Now if we consider some underlying principles that involve medical imaging, then we could take a look at an X-Ray. An x-ray has the shortest wave length of any of the light waves that there are. An x-ray’s frequency is higher than ultra-violet waves. So when shot from an x-ray tube, the sudden burst captures solid body images. Nowadays they use gamma rays. But in any event, the resolution is always poor.

To solve this problem, models are designed to show how radiation propagates through the body. Randomly selected points within the body are assumed to emit radiation (usually a gamma ray), and the trajectory of each ray is followed. As a particle (ray) passes through the body, it is attenuated by the different organs it traverses, continuing until the particle leaves the body and hits a camera model, thereby defining a full trajectory. To create a statistically significant simulation, thousands, if not millions, of trajectories are followed. This problem can be parallelized in two ways. Because each trajectory is independent, it is possible to parallelize the application by associating each trajectory with a task or data parallelization.

So, at this point, we will conclude with a simple example of a 2-D Ray Tracer, a tea cup. The code executes by having a data file that contains the image modeling of an image. Then we execute the program and note quicker the frames per second calculations are performed, as well as the improved resolution. The code is downloadable at the top section of this article. The reader should download a trial evaluation of the Intel C++ Compiler. Once installed, you’ll see how it integrates with Visual Studio 2008 as a built-in. Next, install the Intel TBB beta 3.0 version as your threading library. After that, extract the files into a newly-made folder within your projects directory folder . But we are going to use the “nmake” utility to build the files and their executables. This will be made more clear shortly.

The command that provided the above image is:

C>\..\tachyon.tbb.exe teacup.dat

To build this executable, and other comparison executable that runs with the files held in the dat directory, then build a directory to unzip the downloadable dat.zip files. Then set the environmental variables because we are going to use “nmake” from VS Studio 2008. We first start by configuring the Intel compilers path, and then run a batch script to set the environment. Afterwards, we do the same for the Microsoft VC++ compiler and run a similar batch script to set the environment:

set PATH=%PATH%;.;c:\Program Files\Intel\compiler\11.1\065\bin\ia32

iclvars_ia32.bat

set PATH=%PATH%;.;C:\Program Files\Microsoft Visual Studio 9.0\VC\bin

vcvars32.bat

(now run nmake)

nmake.exe

The build will show balls appearing after serial and parallel executions. Thus, the files are balls.dat, teapot.dat, etc. Try and copy and then paste those .dat files into the prior directory where nmake built the executables.

References

- Intel Threading Building Blocks, by James Reiner

- Patterns of Parallel Programming by Stephen Toub